A team of researchers from Nvidia, Stanford, Caltech, and other institutions has developed NitroGen. Jim Fan, Nvidia's AI director and distinguished scientist, touted NitroGen in a LinkedIn post on Friday as an “open source foundational model trained to play over 1000 games.” Its potential impact, however, reaches well beyond gaming into the real world, with significant benefits for simulation and robotics.

The research is an attempt to build a “GPT for action.” It takes the large-scale pretraining recipe that has proven so successful for language and computer vision models and applies it to actions instead. In the paper's introduction, the researchers note that building generally capable embodied agents that can operate in unknown environments “has long been considered the holy grail of AI research.”

Interestingly, NitroGen is based on the GR00T N1.5 architecture, which was originally designed for robotics. Its success in games suggests the approach could, in turn, bring significant benefits to robots operating in diverse and unpredictable environments.

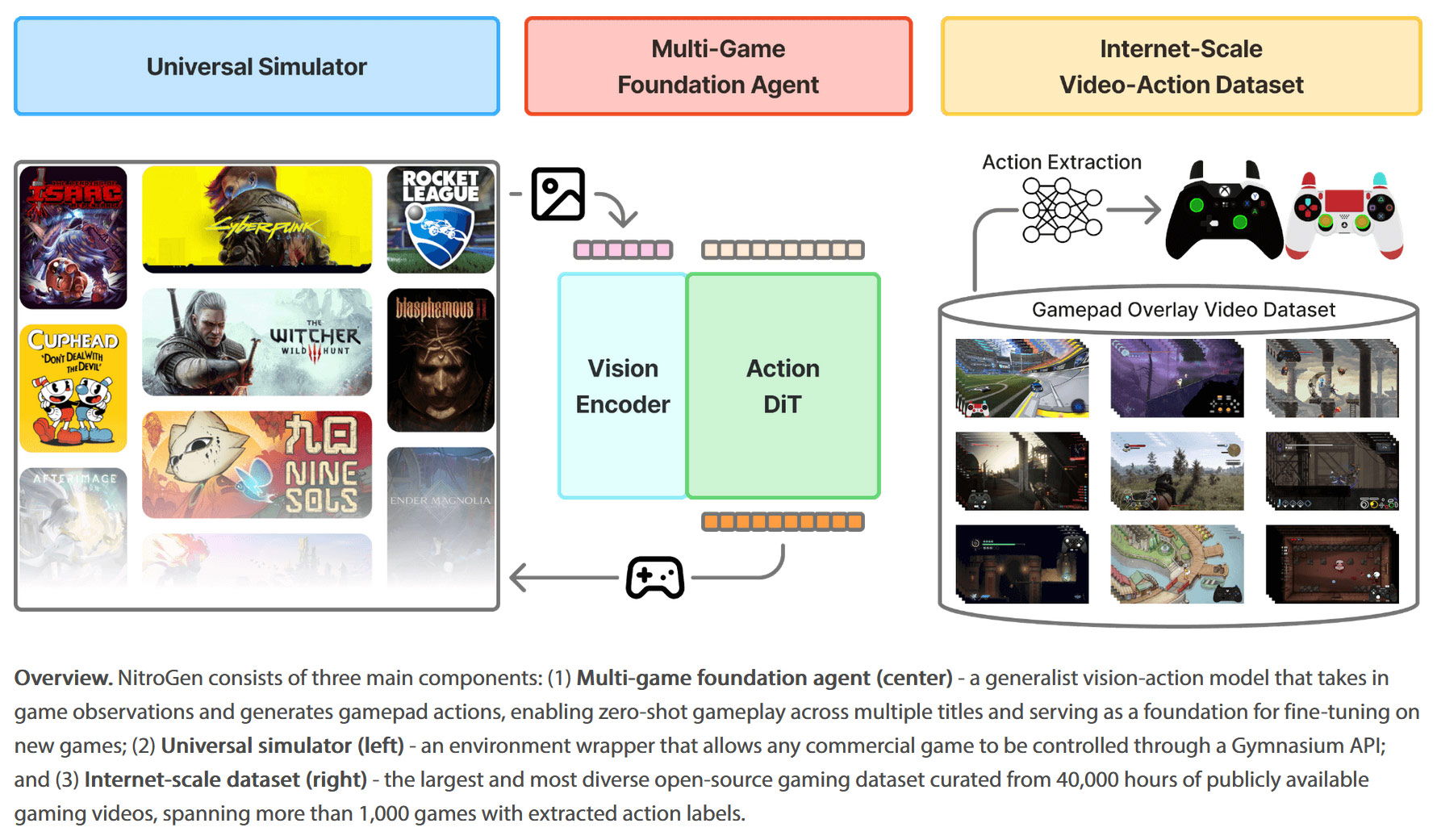

NitroGen was trained to play games with very different mechanics and physics, a variety that is central to the nature and fun of video games. The researchers used more than 40,000 hours of public gameplay videos shared by streamers. Especially valuable were videos in which streamers overlaid their real-time gamepad inputs on screen, because these show exactly which controls the player was using at each moment.

In testing, NitroGen “succeeded in a variety of games, including RPGs, platformers, battle royale, racing, 2D, and 3D,” Fan enthused. While the results are promising, the Nvidia scientists say this is just the beginning and there is still a big mountain to climb.

This first version of NitroGen intentionally focuses on fast motor control, or what Fan calls “gamer instinct.” According to the published research, the model also shows “strong capabilities across diverse domains.” It can operate in procedurally generated worlds as well as in games it has never seen, where it achieved “a 52% relative increase in task success compared to models trained from scratch.”

All of the NitroGen research has been open-sourced, and anyone interested in gaming, robotics, or AI models is encouraged to explore it. The pre-trained model weights, the full action dataset, and the code are all available for anyone to download and experiment with.
