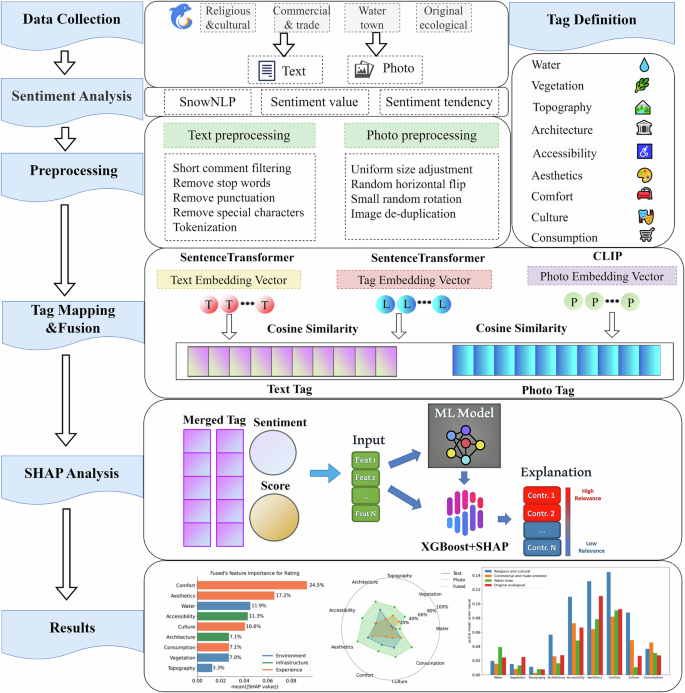

This study collects text and image review data on traditional towns in China from Ctrip. After data preprocessing, a two-level tagging system is established. By converting text and images into vectors, cosine similarity is calculated to perform tag mapping. To fully utilize multimodal information, tag data from text and images are further fused. XGBoost and SHAP are used to analyze the impact of different tags on sentiment values and ratings. This quantifies the importance of various features in tourist evaluations. The research framework of this study is shown in Fig. 2.

Research framework diagram.

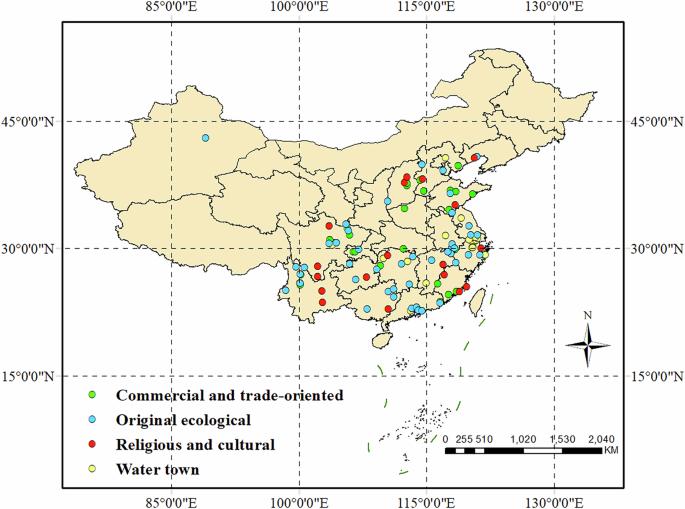

Study area and data

China has many traditional towns with long histories and diverse styles. They carry rich cultural heritage and distinctive landscape features, making them an ideal case for exploring tourist perceptions and the multidimensional characteristics of traditional towns. Ctrip (https://www.ctrip.com), one of China’s most widely used travel booking and review platforms, has accumulated a large volume of authentic tourist feedback. It provides a reliable data source for this study. This study collects tourist review data on traditional towns from Ctrip, covering the period from January 1, 2015, to January 31, 2025. The dataset includes both textual reviews and user-uploaded images, enabling a multimodal representation of visitor perceptions. To ensure data representativeness, we included only traditional towns with at least 40 reviews. As a result, we obtained valid data from 114 traditional towns. To ensure the scientific validity and accuracy of traditional town classification, the study invited eight university researchers in the field of landscape design to review and verify the classifications. Based on their geographical environment, cultural background, and functional characteristics, the traditional towns were classified into four categories: ecological traditional towns (n = 47), waterfront traditional towns (n = 21), commercial traditional towns (n = 28), and religious cultural traditional towns (n = 18) (Fig. 3). During data preprocessing, invalid and duplicate reviews were removed. As a result, the study obtained 131,596 valid text reviews and 382,026 images uploaded by tourists. These data are used for subsequent multimodal data fusion and SHAP-based interpretability analysis to quantify the impact of landscape features in different types of traditional towns on tourist perceptions.

Data preprocessing

To ensure data standardization and usability, the text and image data collected from Ctrip must undergo preprocessing. In the text preprocessing stage, reviews with fewer than 10 characters are removed to filter out invalid data. The text content is cleaned by removing HTML tags and special characters. During word segmentation, the Jieba segmentation tool is used to split the text23. Stop words and punctuation are removed to reduce noise and improve data quality24. In the image preprocessing stage, all images are resized to 640×640 pixels to ensure uniform dimensions. Random horizontal flipping and a maximum 10-degree rotation are applied for data augmentation25,26. This enhances data diversity and improves model generalization. A hash algorithm is used to detect and remove duplicate images, preventing data redundancy27.
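The cleaning and de-duplication steps can be sketched as follows. This is a minimal illustration using only the Python standard library, not the study's pipeline. The regular expressions, the choice to apply the 10-character filter after cleaning, and the use of SHA-256 are all assumptions, since the text only specifies "special characters" and "a hash algorithm". Word segmentation with Jieba and image resizing are omitted.

```python
import hashlib
import html
import re

MIN_CHARS = 10  # reviews shorter than this are dropped, as stated in the text

TAG_RE = re.compile(r"<[^>]+>")
# Assumption: a "special character" is anything that is not a word character,
# whitespace, or common Chinese/ASCII punctuation.
SPECIAL_RE = re.compile(r"[^\w\s，。！？、；：,.!?;:]")


def clean_review(raw):
    """Strip HTML tags and special characters; return None if too short."""
    text = html.unescape(TAG_RE.sub("", raw))
    text = SPECIAL_RE.sub("", text)
    text = re.sub(r"\s+", " ", text).strip()
    # Assumption: the length filter is applied to the cleaned text.
    return text if len(text) >= MIN_CHARS else None


def dedupe_by_hash(blobs):
    """Keep the first occurrence of each distinct byte string (image file)."""
    seen, unique = set(), []
    for blob in blobs:
        digest = hashlib.sha256(blob).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(blob)
    return unique
```

A cryptographic hash such as SHA-256 only catches byte-identical files. Detecting re-encoded or resized copies would require a perceptual hash instead.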

Definition of the traditional town tagging system

This subsection establishes a two-level tagging system for traditional towns based on their landscape characteristics, cultural background, and tourist experiences. The first-level tags include environment, infrastructure, and experience. The second-level tags are categorized as follows: environment includes water, vegetation, and topography; infrastructure includes architecture and accessibility; and experience includes aesthetics, comfort, culture, and consumption.
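For reference, the two-level system can be written as a small lookup structure. The variable names below are illustrative and do not come from the study.

```python
# First-level tags mapped to their second-level tags, as defined in the text.
TAG_SYSTEM = {
    "environment": ["water", "vegetation", "topography"],
    "infrastructure": ["architecture", "accessibility"],
    "experience": ["aesthetics", "comfort", "culture", "consumption"],
}

# Flat list of second-level tags; its order fixes the layout of label vectors.
SECOND_LEVEL_TAGS = [tag for tags in TAG_SYSTEM.values() for tag in tags]

# Reverse lookup from a second-level tag to its first-level parent.
PARENT_OF = {tag: parent for parent, tags in TAG_SYSTEM.items() for tag in tags}
```

This gives nine second-level tags in total, so each review or image maps to a nine-element label vector.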

Text sentiment analysis

This study uses the BosonNLP tool to conduct sentiment analysis on tourists’ text reviews, extracting continuous sentiment scores ranging from 0 to 1 (ref. 28). Higher values indicate more positive emotions, while lower values indicate more negative emotions. To facilitate subsequent modeling and interpretation, the sentiment scores are divided into three intervals: positive sentiment (0.8–1.0), neutral sentiment (0.4–0.8), and negative sentiment (0–0.4), which characterize the emotional tendencies expressed by tourists in their reviews.
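The three-way binning can be sketched as a simple function. The stated intervals share their endpoints, so the sketch assumes that a score of exactly 0.4 or 0.8 falls into the higher class. That boundary rule is an assumption and is not specified in the text.

```python
def sentiment_class(score):
    """Map a sentiment score in [0, 1] to a coarse sentiment class."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("sentiment score must lie in [0, 1]")
    # Assumption: boundary values belong to the upper interval.
    if score >= 0.8:
        return "positive"
    if score >= 0.4:
        return "neutral"
    return "negative"
```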

Sentiment scores, together with the ratings actively given by tourists on the platform, constitute the satisfaction evaluation system in this study. The rating represents an explicit judgment of the overall experience by tourists and is a discrete variable ranging from 1 to 5. The sentiment score is derived from the naturally expressed emotions in the review text and, as a continuous variable, offers greater granularity in capturing nuanced differences.

The inclusion of sentiment scores helps to identify potential discrepancies between ratings and emotions. For example, some reviews have high ratings but express negative emotions in the text, while others have low ratings but use positive language. Such discrepancies between ratings and sentiment provide a methodological basis for analyzing perception gaps and identifying key influencing factors in subsequent analysis. They also offer targeted supplementary information for the optimization of cultural landscape spaces.

Text label mapping

To extract key information from tourist reviews, this section applies a label mapping method. Text reviews are matched with the predefined tagging system for traditional towns, and label vectors are computed. The label vector serves as a key input for the subsequent SHAP analysis. It quantifies the features that tourists focus on. This section employs the SentenceTransformer model for label mapping29. Text reviews are converted into embedding vectors, and their similarity to label text is calculated to determine the relevant feature categories.

(1) Embedding representation of text and labels

The goal of text embedding is to convert textual information into fixed-dimensional vector representations. This captures semantic features, enabling similarity computation in the vector space. This section uses SentenceTransformer for text vectorization. Each review and label text is mapped into the same high-dimensional space to facilitate label matching30.

Let the embedding representation of the review text be \({V}_{c}\) and the embedding representation of the label text be \({V}_{t}\). The embedding calculation formula is as follows:

$${V}_{c}=f({T}_{c}),\,{V}_{t}=f({T}_{t})$$

(1)

\(f(\cdot )\) represents the encoding function of SentenceTransformer, while \({T}_{c}\) and \({T}_{t}\) correspond to the input review text and label text, respectively.

(2) Similarity calculation

The core of label mapping is to calculate the semantic similarity between review text and label text in the embedding space. This section uses cosine similarity for calculation. This method measures text similarity based on the angle between vectors. Its value ranges from -1 to 1. A value closer to 1 indicates a higher semantic similarity between the text and the label31. The calculation formula is as follows:

$$S({T}_{c},{T}_{t})=\frac{{V}_{c}\cdot {V}_{t}}{\parallel {V}_{c}\parallel \parallel {V}_{t}\parallel }$$

(2)

\({V}_{c}\cdot {V}_{t}\) represents the dot product of the embedding vectors of the review text and the label text. \(\parallel {V}_{c}\parallel\) and \(\parallel {V}_{t}\parallel\) are the vector norms of the review text and label text, respectively. To ensure the similarity values range from 0 to 1 for better interpretability, the results are normalized using the following transformation formula:

$${S}^{{\prime} }=\frac{S+1}{2}$$

(3)
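Equations (2) and (3) can be illustrated with a short, dependency-free sketch. The function names are illustrative. In practice the input vectors would come from the SentenceTransformer encoder, whereas plain Python lists are used here.

```python
import math


def cosine_similarity(u, v):
    """Eq. (2): dot product divided by the product of the vector norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def rescale(s):
    """Eq. (3): map a cosine similarity from [-1, 1] onto [0, 1]."""
    return (s + 1.0) / 2.0
```

Under this rescaling, orthogonal vectors (S = 0) receive S′ = 0.5, and opposite vectors (S = −1) receive S′ = 0.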

(3) Label matching

After calculating similarity, a threshold needs to be set for label matching32. This ensures that the extracted labels are highly relevant to the review content. This study refers to previous research33,34,35 and selects 0.6 as the similarity threshold. This value is chosen by balancing precision and recall.

When the similarity between a review text and a label is 0.6 or higher, the label is considered relevant to the review. Each review is ultimately mapped to a label vector. Each element in the vector corresponds to a label. If a label matches the review, it is assigned a value of 1; otherwise, it is assigned 0.
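The thresholding step can be sketched as below. The similarity scores in the usage example are hypothetical. The sketch also assumes that the 0.6 threshold is applied to the rescaled similarity S′, which the text does not state explicitly. Under that assumption, 0.6 corresponds to a raw cosine similarity of 0.2.

```python
THRESHOLD = 0.6  # similarity threshold stated in the text

# Second-level tags; the order defines the positions in the label vector.
LABELS = [
    "water", "vegetation", "topography",
    "architecture", "accessibility",
    "aesthetics", "comfort", "culture", "consumption",
]


def label_vector(similarities, threshold=THRESHOLD):
    """Return a 0/1 vector with 1 where a label's similarity meets the threshold.

    `similarities` maps label name to a similarity in [0, 1]; labels that are
    missing from the mapping are treated as not matched.
    """
    return [1 if similarities.get(label, 0.0) >= threshold else 0
            for label in LABELS]


# Hypothetical scores for one review.
example = {"water": 0.72, "architecture": 0.60, "culture": 0.59}
vector = label_vector(example)
```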

Image label mapping

To systematically identify image content and assign semantic labels to each traditional town image, this section employs the CLIP (Contrastive Language-Image Pre-training) model36. This enables semantic mapping between traditional town images and the predefined tagging system32. By calculating the semantic similarity between images and label texts, the correspondence between images and labels is determined.

Image embedding generation: The image encoder in the CLIP model extracts high-dimensional features from each input image \(P\), generating the image embedding vector \({V}_{p}\). To facilitate similarity calculation, the vector is normalized to ensure a unit length constraint:

$${V}_{p}^{{\prime} }=\frac{{V}_{p}}{\parallel {V}_{p}\parallel }$$

(4)

Label embedding generation: The CLIP model’s text encoder converts predefined second-level label texts into high-dimensional embedding vectors. These vectors are then normalized to ensure scale consistency, facilitating subsequent similarity calculations.

Similarity calculation: Cosine similarity is used to measure the similarity between each image embedding vector and all label embedding vectors. This determines the semantic relevance between images and label texts. The similarity calculation follows the same method as described in the section “Text label mapping”, resulting in a set of assigned labels \({L}_{p}\).

Multimodal label integration

This subsection employs a multimodal fusion method to integrate the label information from text and images, enhancing the representation of traditional town features. Text and images each have their strengths in presenting information. Text reflects tourists’ subjective experiences, whereas images convey intuitive visual features. Single modalities may be prone to information loss or bias. Therefore, integrating labels from both text and images improves data completeness and enhances model interpretability37.

The calculation method for integrating labels is as follows:

$${L}_{f}={L}_{c}\vee {L}_{p}$$

(5)

\({L}_{c}\) and \({L}_{p}\) represent the label values for text and images, respectively, and \({L}_{f}\) is the final fused label.
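Equation (5) is an element-wise logical OR over the two binary label vectors. A minimal sketch follows, in which the function name is illustrative.

```python
def fuse_labels(text_labels, image_labels):
    """Eq. (5): a fused label is 1 if the text or the image carries it."""
    if len(text_labels) != len(image_labels):
        raise ValueError("label vectors must have the same length")
    return [c | p for c, p in zip(text_labels, image_labels)]
```

For example, a label detected only in the images of a review still appears in the fused vector, which is how the fusion recovers information that a single modality misses.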

Feature importance analysis based on SHAP

This study uses the SHAP method to analyze the influence of different features on tourist ratings and sentiment scores. SHAP is model-agnostic and offers sample-level interpretability, enabling it to reveal feature influences on predictions at both global and individual levels.

The input data in this study consists of multimodal features combining text and images. Tourist perception outcomes may involve nonlinearity and interaction effects, making traditional linear regression limited in both modeling capability and interpretability. Therefore, we use XGBoost to build the prediction model and apply the SHAP method to interpret its outputs. This method provides both global feature ranking and sample-level explanations, making it particularly suitable for exploring perceptual differences among tourists across various types of traditional towns in this study.

In terms of model selection, this study uses XGBoost for regression analysis. XGBoost is widely used in large-scale, multidimensional data analysis due to its strong nonlinear modeling capabilities, excellent overfitting resistance, and robustness to missing values. Especially in social media data analysis scenarios, XGBoost can effectively handle text, image, and fused features, improving prediction accuracy and stability. This section first trains the model using XGBoost and then uses the SHAP method to calculate the contribution of text, image, and fused features to the prediction of tourist ratings and sentiment values.

The SHAP value calculation for feature \(i\) is as follows38:

$${\psi }_{i}=\sum _{{\rm{T}}\subseteq {\rm{M}}\setminus \{i\}}\frac{|{\rm{T}}|!(|{\rm{M}}|-|{\rm{T}}|-1)!}{|{\rm{M}}|!}[g({\rm{T}}\cup \{i\})-g({\rm{T}})]$$

(6)

\({\rm{M}}\) is the set of all features, \({\rm{T}}\) is any subset of features that excludes feature \(i\), and \(g({\rm{T}})\) is the model prediction obtained using only the feature set \({\rm{T}}\). The formula averages the marginal contribution of feature \(i\) over all such subsets, so the resulting importance reflects the feature's effect in combination with the other features.
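To make Eq. (6) concrete, the sketch below evaluates it by brute force for a toy value function. The function `toy_g`, its coefficients, and the three feature names are hypothetical and chosen only for illustration. The enumeration is exponential in the number of features, so it is not how SHAP values would be computed for a trained XGBoost model, where tree-specific algorithms are used instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(features, g):
    """Evaluate Eq. (6) exactly by enumerating every subset T of M without i.

    `features` is the list M; `g` maps a frozenset of features to a prediction.
    """
    m = len(features)
    values = {}
    for i in features:
        others = [f for f in features if f != i]
        total = 0.0
        for size in range(len(others) + 1):
            # Weight |T|! (|M| - |T| - 1)! / |M|! depends only on |T|.
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                t = frozenset(subset)
                total += weight * (g(t | {i}) - g(t))
        values[i] = total
    return values


def toy_g(t):
    """Hypothetical rating model with one interaction term."""
    score = 3.0
    if "water" in t:
        score += 0.5
    if "culture" in t:
        score += 0.3
    if "water" in t and "culture" in t:
        score += 0.2  # interaction is split equally between the two features
    return score


phi = shapley_values(["water", "culture", "accessibility"], toy_g)
```

In this toy case the interaction bonus is shared equally, the feature with no effect receives a value of zero, and the values sum to the difference between the full-feature prediction and the empty-set prediction.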