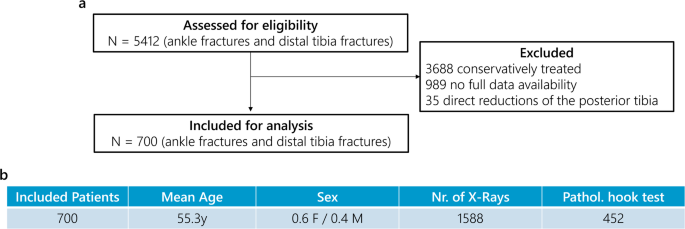

Dataset

Over the past five years (2019-2024), 5,412 consecutive patients who sustained ankle fractures were identified in the University Hospital Clinical Information System (Level I Trauma Center).

Given the retrospective and exploratory design of this study, no a priori sample size estimation or statistical power analysis was performed. The cohort size was determined by the availability of existing clinical data, and all eligible cases were included to maximize analytical breadth while acknowledging potential limitations in generalizability.

Of the 5,412 patients, 1,724 received surgical treatment, and in 735 of these the hook test was clearly documented in the surgical report as showing stability or instability. Of the 989 excluded cases, 622 lacked preoperative radiographs because imaging had been performed externally, 110 lacked AP (anteroposterior) or lateral views, and in 257 the surgical report did not adequately document the hook test. Ground truth was defined as written documentation of a stable or unstable hook test. Patients without an explicitly stated hook test result were excluded even when treatment for an unstable syndesmosis, such as positioning screws, was present. Patients with only hook test radiographs but no explicit statement on stability in the surgical report were excluded. Patients in whom the hook test was performed before complete fracture fixation were also excluded. A further 35 patients were excluded because of direct reduction of the posterior malleolus, which could bias the traditional variant of the hook test. A total of 10 senior surgeons specialized in trauma surgery performed the intraoperative hook tests as recorded in the surgical reports. No retrospective quantitative evaluation of the hook test was performed.

Of the 700 patients included, 1,588 preoperative radiographs were included in the study assessment. The discrepancy between the number of patients and the number of radiographs is due to the inclusion of pre- and post-reduction imaging and imaging in immobilization. The 1,588 digital radiographs were anonymized and exported from the internal Picture Archiving and Communication System (PACS) in DICOM format. Data export and anonymization were carried out in May 2023 through the Radiology Institute's accredited pipeline in compliance with the Bavarian Hospital Act (BayKRG) and the General Data Protection Regulation (GDPR), with approval from the local ethics committee (23–173 – BR). The image acquisition parameters were as follows: 80% of the radiographs were obtained using an AGFA system (model: 3543EZE, DXD30_Wireless) and 20% using a Siemens Healthineers system (model: YSIO X.Pree). The average tube voltage was 58.1 kV (range: 51.8-69.8 kV), the average tube current was 466.8 mA, and the average source-to-detector distance was 1150.0 mm. The radiographs were automatically matched with the surgical reports, and one investigator checked each report for a documented intraoperative syndesmosis stability test. Depending on the result of the intraoperative stability test, fractures were assigned to the corresponding group: syndesmosis stable or syndesmosis unstable. In the same step, AO classification labels were extracted from the surgical reports so that stability classification and AO classification could later be performed simultaneously. The study flow chart and demographic data are shown in Figure 1 and Table 1.

Patient inclusion flow chart.

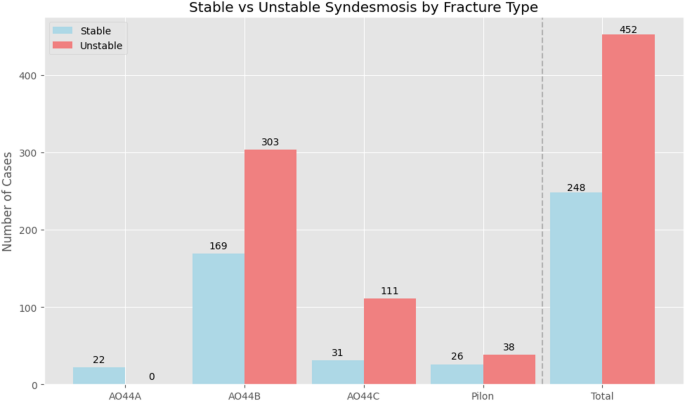

The distribution of AO classification results is shown in Figure 2. In the subanalysis, the syndesmosis was considered stable in all AO44-A fractures. Among AO44-B fractures, 169 were considered stable and 311 unstable (35% stable). Among AO44-C fractures, 21% were stable, and in the remaining group 38 cases were stable (40% stable). An 80-10-10 train-validation-test split was used, as in most deep learning studies and as is standard for small datasets [18] (Table 1). The partitions were split at the patient level. Data were randomly divided, stratified by AO44 subgroup frequency, and assigned to the training, validation, and test sets by a random number generator using numpy.
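The patient-level, subgroup-stratified 80-10-10 split described above can be sketched as follows. This is a minimal illustration, not the study's code: it uses Python's standard `random` module in place of numpy, and the function name `stratified_patient_split`, the patient IDs, the seed, and the subgroup counts are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_patient_split(patient_groups, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split patient IDs into train/val/test, stratified by AO subgroup.

    patient_groups maps a patient ID to its AO subgroup label (hypothetical input).
    Splitting on patient IDs keeps all radiographs of one patient in one partition.
    """
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for pid, group in sorted(patient_groups.items()):
        by_group[group].append(pid)

    splits = {"train": [], "val": [], "test": []}
    for group in sorted(by_group):
        ids = by_group[group]
        rng.shuffle(ids)
        n_val = round(len(ids) * fractions[1])
        n_test = round(len(ids) * fractions[2])
        splits["val"] += ids[:n_val]
        splits["test"] += ids[n_val:n_val + n_test]
        splits["train"] += ids[n_val + n_test:]  # remainder goes to training
    return splits


# Illustrative subgroup counts only (assumed, not taken from the study tables).
counts = {"AO44-A": 20, "AO44-B": 480, "AO44-C": 140, "pilon": 60}
patient_groups = {f"{g}-{i:03d}": g for g, n in counts.items() for i in range(n)}

splits = stratified_patient_split(patient_groups)
print({name: len(ids) for name, ids in splits.items()})
```

Because the split operates on patient IDs rather than on images, AP and lateral views of the same patient cannot leak between the training and test partitions.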

Class distribution of syndesmosis instability by AO classification. Each number represents an individual patient.

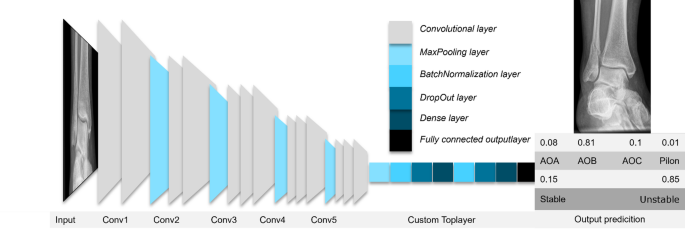

Deep Learning Network Architecture and Data Pipeline

The CNN architecture was implemented in Python with the open-source package TensorFlow 2 [19]. Various state-of-the-art deep learning networks with pre-trained weights, such as VGG16, EfficientNet, NASNetMobile, and ResNet, were applied to the dataset and investigated [20, 21, 22]. In the early stages of model development, several established convolutional neural network architectures were systematically trained and evaluated on randomly generated subsets of the dataset to identify the architecture that achieved the best diagnostic performance for this classification task. The base network architecture was preserved, and the top layers (batch normalization [23], dropout, max pooling) were adapted to minimize overfitting according to current recommendations (Fig. 3) [17]. The model was initialized with a transfer learning approach using ImageNet pre-trained weights [24]. Layer freezing was used during the early training stage to preserve pre-trained features during transfer learning [24].

Sample Network (VGG16) and custom TopLayer configuration.

Image preparation is at the heart of deep learning applications. Each image was normalized and augmented using a randomized combination of geometric transformations (shear, elastic deformation), texture/edge changes (blur, sharpen, emboss, edge detection), stochastic perturbations (dropout), and color distortions (contrast, brightness, channel permutation). Augmentation was applied on the fly by a custom image generator, so no additional storage for images was required. Random augmentation at each training epoch maximized training efficiency. Additional improvements in domain adaptation and robustness of the network have been demonstrated in previous studies [17]. To counter the class imbalance present in the dataset (Figure 2), we applied class weighting to the loss function: higher weights were assigned to underrepresented classes so that the model prioritized their correct classification and the classes had a balanced effect during training.
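As an illustration of class weighting, the sketch below computes inverse-frequency ("balanced") weights and applies them to a binary cross-entropy loss. The weighting formula, the function names, and the toy label counts are assumptions; the text does not state which scheme was used. In Keras, a dictionary like this one can be passed to `Model.fit` through its `class_weight` argument.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


def weighted_bce(y_true, p_pred, weights, eps=1e-7):
    """Mean binary cross-entropy with each sample scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        total += weights[y] * loss
    return total / len(y_true)


# Toy labels (hypothetical): 0 = stable (minority), 1 = unstable (majority).
labels = [0] * 30 + [1] * 70
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, each class contributes the same total weight to the loss (30 × 100/60 = 70 × 100/140 = 50), so errors on the minority class are not drowned out by the majority class.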

Training used the following optimizations. A cyclical learning rate (CLR) [25] dynamically adjusted the learning rate (1e-7 to 1e-3) to escape local minima and, as demonstrated in the classification of epilepsy histopathology, to stabilize training and validation accuracy [26]. Early stopping was applied if the validation loss did not improve for 10 epochs [27]; stopping training when the validation metric plateaus prevents overfitting. Training was performed with a batch size of 128 and the Adam optimizer.
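The two training controls above can be sketched in plain Python. The learning-rate bounds (1e-7 to 1e-3) and the patience of 10 come from the text; the triangular cycling policy, the `step_size` value, and the function names are assumptions made for illustration. In Keras, early stopping of this kind is available as `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=10)`.

```python
import math


def triangular_clr(iteration, base_lr=1e-7, max_lr=1e-3, step_size=2000):
    """Triangular cyclical learning rate.

    The rate rises linearly from base_lr to max_lr over step_size iterations,
    then falls back over the next step_size iterations, and repeats.
    """
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


def early_stopping_epoch(val_losses, patience=10):
    """Return the epoch index at which training stops, or None if it never does."""
    best, wait = float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, wait = loss, 0  # improvement: reset the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


for it in (0, 1000, 2000, 4000):
    print(it, triangular_clr(it))

# Toy validation curve (hypothetical): improves for three epochs, then plateaus.
val_losses = [1.0, 0.9, 0.8] + [0.85] * 12
stop_epoch = early_stopping_epoch(val_losses)
```

In this toy curve the best loss occurs at epoch 2, so training stops ten non-improving epochs later.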

Performance evaluation

To validate model performance, the dataset was split into 10 stratified folds that balanced the baseline rate of syndesmosis instability across the subsets. In each iteration, one fold was reserved as the validation set and the others were used as the training set, with cases allocated randomly to each fold. Performance metrics included accuracy, loss, sensitivity, specificity, precision, and F1 score.
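A stratified 10-fold scheme of this kind can be sketched as below. This is a simplified standard-library illustration with hypothetical names and toy labels, not the study's implementation; cases of each class are shuffled and dealt round-robin so that every fold keeps roughly the overall class ratio.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, n_splits=10, seed=0):
    """Yield (train_indices, val_indices) pairs with class-balanced folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(n_splits)]
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)  # random allocation of cases to folds
        for pos, idx in enumerate(idxs):
            folds[pos % n_splits].append(idx)

    for k in range(n_splits):
        val = sorted(folds[k])
        train = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        yield train, val


# Toy labels (hypothetical): 0 = stable, 1 = unstable.
labels = [0] * 40 + [1] * 60
cv_folds = list(stratified_kfold(labels))
for train, val in cv_folds[:2]:
    print(len(train), len(val), sum(labels[i] for i in val))
```

Each case appears in exactly one validation fold, and every validation fold here contains the same 40/60 class ratio as the full toy dataset.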

To assess the performance of the algorithm, the unseen test set underwent only basic preprocessing and normalization, without augmentation. The test set comprised 70 randomly selected cases not included in the training and validation sets. Randomization was balanced across all AO subclasses using numpy random number generation. The test set consisted of two AO44-A cases, 48 AO44-B cases, 14 AO44-C cases, and six pilon fracture cases. We then predicted the AP (anteroposterior) and lateral images for each case and calculated the average probability, p = (p_AP + p_lat)/2, with a threshold of p > 0.5 for the individual patient prediction. Classification results were assessed by accuracy, sensitivity, specificity, precision, F1 score, and confidence intervals.
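The per-patient decision rule, averaging the AP and lateral probabilities and thresholding at 0.5, together with the usual confusion-matrix metrics, can be sketched as follows. The function names and the toy probabilities are hypothetical, and treating "unstable" as the positive class (label 1) is an assumption.

```python
def patient_prediction(p_ap, p_lat, threshold=0.5):
    """Average the AP and lateral probabilities and apply the threshold."""
    p = (p_ap + p_lat) / 2
    return p, int(p > threshold)


def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity, precision, and F1 from binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else 0.0  # guard against empty classes

    sensitivity = ratio(tp, tp + fn)
    precision = ratio(tp, tp + fp)
    return {
        "accuracy": ratio(tp + tn, len(y_true)),
        "sensitivity": sensitivity,
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }


# Toy example (hypothetical): (p_AP, p_lat) per patient; 1 = unstable.
probs = [(0.9, 0.7), (0.4, 0.7), (0.2, 0.3), (0.1, 0.2), (0.6, 0.7), (0.8, 0.6)]
y_true = [1, 1, 1, 0, 0, 1]
y_pred = [patient_prediction(p_ap, p_lat)[1] for p_ap, p_lat in probs]
metrics = binary_metrics(y_true, y_pred)
print(y_pred, metrics)
```

Averaging the two views means a confident prediction on one projection can outweigh an uncertain one on the other, as in the second toy patient.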

Visualization

Guided score-weighted class activation mapping (GSCAM) improves the explainability of the diagnosis in the assessment of syndesmosis instability by integrating CLIP-derived attention mechanisms with guided backpropagation and clinically important imaging biomarkers [28]. Unlike gradient-dependent methods such as Grad-CAM [29], this technique uses confidence scores from the target class activation to generate spatial attention maps, reducing noise and preserving the high-resolution anatomical detail needed to detect subtle ligament damage.

Hardware

The approach was implemented on a local server running Ubuntu with one Nvidia GeForce RTX 3080 Ti, an Intel Core i7-12700 CPU, 64 GB RAM, CUDA 11.7, and cuDNN 8.0.