From robotic arms that learn to pick up new objects in real time, to models that transform 2D video into 3D virtual reality, to curious chatbots that adapt to users, to machine learning techniques to decipher the brain (and much more), the 2025 edition of our annual Research Showcase and Open House highlighted the breadth and depth of computing innovation coming out of the Allen School.

Last week, nearly 400 industry partners, alumni and friends gathered on the University of Washington campus to learn how the school is working to solve a series of “big challenges” that benefit society. The Allen School is uniquely positioned to lead in its field. Attendees took part in technical sessions covering a wide range of topics, from artificial intelligence to sustainable technology, and heard a luncheon keynote in which Allen School professor Matt Golub explored how researchers are leveraging machine learning to decipher causality, learning, and communication in the brain. During the evening poster session and open house, they learned directly from student researchers about more than 80 research projects, including those that earned the coveted Madrona Prize and the People’s Choice Award.

Unraveling the mysteries of how the brain learns, with the help of machine learning

Allen School professor Matt Golub shared recent research from the Systems Neuroscience and AI Lab (SNAIL) in his luncheon keynote address. The research uses machine learning techniques to better understand how the brain performs computations such as decision-making and learning, insights that could inform future advances in AI. Previous research has focused on reconstructing neuronal connections ex vivo, or outside the body, by examining thin sections of the brain under a microscope. Golub and his team instead analyze neural activity as it unfolds in the living brain.

“Solving these questions will inform future studies of how different brain networks perform distributed computations through their connections, and the dynamics of activity that flows through those connections,” Golub said. “Then we can study how connections change as the brain learns. We may discover the learning rules that govern synaptic plasticity.”

Golub and his collaborators introduced a new approach to analyzing neural population dynamics that combines optogenetics with computational modeling. The researchers used lasers to target individual neurons and stimulate their activity; if stimulating one neuron reliably caused another to fire, they inferred a connection between the two. Because these experiments are resource-intensive, the researchers designed active learning algorithms to choose the stimulation patterns, that is, which specific neurons to target with the laser, so that they could learn an accurate model of the neural network from as little data as possible.
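To make the active learning idea concrete, here is a minimal sketch under simplifying assumptions that are ours, not the team's: each connection is treated as the probability that stimulating neuron i makes neuron j fire, tracked with a Beta posterior, and the next neuron to stimulate is chosen by plain uncertainty sampling (the candidate whose outgoing estimates have the largest total posterior variance). The functions `choose_target` and `run_active_mapping` and the simulated `true_probs` matrix are hypothetical; the researchers' algorithms target models of neural population dynamics, which this toy does not attempt.

```python
import random


def beta_variance(a: float, b: float) -> float:
    """Variance of a Beta(a, b) distribution."""
    return a * b / ((a + b) ** 2 * (a + b + 1))


def choose_target(a, b):
    """Uncertainty sampling: pick the neuron whose outgoing connection
    estimates are, in total, the most uncertain (ties go to the lowest index)."""
    scores = [sum(beta_variance(x, y) for x, y in zip(a[i], b[i]))
              for i in range(len(a))]
    return max(range(len(scores)), key=scores.__getitem__)


def run_active_mapping(true_probs, n_trials, seed=0):
    """Simulated experiment loop (hypothetical). true_probs[i][j] stands in for
    the unknown probability that stimulating neuron i makes neuron j fire."""
    rng = random.Random(seed)
    n = len(true_probs)
    a = [[1.0] * n for _ in range(n)]  # Beta(1, 1) prior: 1 + observed firings
    b = [[1.0] * n for _ in range(n)]  # 1 + observed non-firings
    for _ in range(n_trials):
        i = choose_target(a, b)          # decide which neuron to stimulate next
        for j in range(n):               # record every neuron's response
            if rng.random() < true_probs[i][j]:
                a[i][j] += 1
            else:
                b[i][j] += 1
    # Posterior-mean estimate of each connection's strength.
    return [[a[i][j] / (a[i][j] + b[i][j]) for j in range(n)] for i in range(n)]


# Usage: three simulated neurons with a few strong connections.
true_probs = [[0.0, 0.9, 0.1],
              [0.0, 0.0, 0.8],
              [0.2, 0.0, 0.0]]
estimate = run_active_mapping(true_probs, n_trials=600, seed=0)
for row in estimate:
    print([round(p, 2) for p in row])
```

Because each estimate's variance shrinks roughly in proportion to one over the number of trials, uncertainty sampling spreads stimulation across neurons according to how uncertain their connections remain, rather than splitting trials uniformly.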

Zooming out for a higher-level view, Golub and his team also developed a new machine learning method for identifying communication between brain regions. The technique, called multi-region latent factor analysis via dynamical systems (MR-LFADS), uses neural activity recorded from multiple regions to uncover how different parts of the brain interact with each other. Unlike existing approaches, MR-LFADS also accounts for what unobserved brain regions are “saying”: the team’s custom deep learning architecture can detect when activity in the recorded regions reflects input from regions that were not recorded. When applied to real neural recordings, MR-LFADS predicted how disrupting one brain region would affect other regions, even though the model had never seen such effects before.

Golub said he is now thinking about “the nexus between these two fronts.” In particular, he is interested in advancing active learning for nonlinear and other deep learning models to help generate AI-guided causal manipulations: experiments in which the model can tell researchers what data it needs to improve.

“All of this is aimed at trying to understand how our brains compute and learn to drive flexible behavior. That progress can and has inspired innovations in AI systems and new approaches to improving the health and function of our brains,” Golub said.

“There’s a lot of amazing research out there.”

The event culminated with the evening poster session and the presentation of the Madrona Prize, an annual tradition in which Madrona Venture Group recognizes innovative student research with commercial potential. Past winners have raised hundreds of millions of dollars in venture capital and founded companies that have been acquired by technology giants such as Google and NVIDIA.

Sabrina Albert, a partner at Madrona, presented the 2025 awards to the winner and two runners-up.

“There’s so much great research that you’re all working on that it was very difficult to choose which is the most exciting,” Albert said.

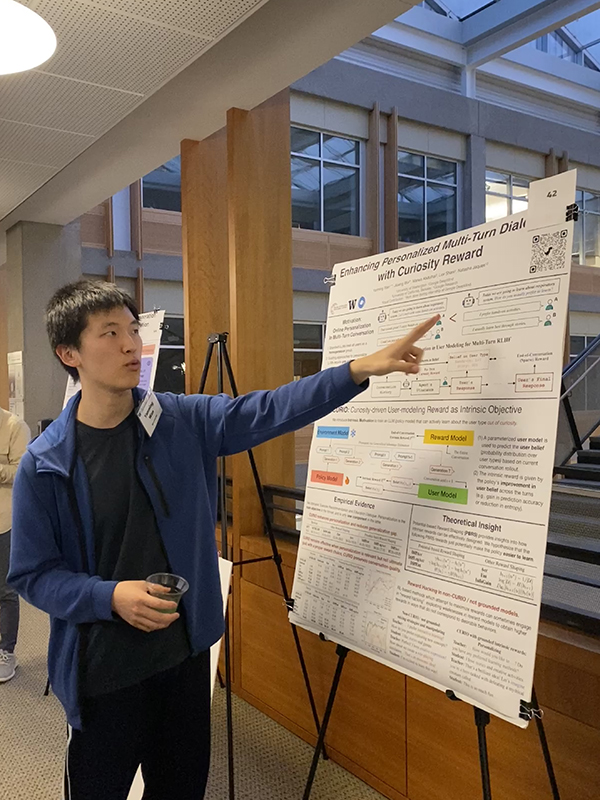

Madrona Prize Winner / Powering Personalized Multi-Turn Interactions with Curiosity Rewards

Allen School Ph.D. student Yanming Wan won the grand prize for CURIO (Curiosity-driven User-modeling Reward as an Intrinsic Objective), a new framework that enhances the personalization of large language models (LLMs) in multi-turn conversations.

Conversational agents need to adapt not only to diverse domains such as education and healthcare, but also to the preferences and personalities of different users. However, traditional methods for training LLMs rely on pre-collected user data, often struggle with personalization, and are less effective for new users or users with limited context.

To address this problem, Wan and the team introduced an intrinsic motivation reward that drives the LLM to actively learn about the user out of curiosity and adapt to the user’s individual preferences during the conversation.

“We propose leveraging a user model to incorporate a curiosity-based intrinsic reward into multi-turn reinforcement learning from human feedback (RLHF),” Wan said. “This new reward mechanism encourages LLM agents to proactively infer user traits by optimizing conversations to improve the accuracy of the user model’s beliefs. As a result, the agent learns more about the user with each turn, enabling more personalized interactions.”
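One way to picture a curiosity-based intrinsic reward is sketched below. It assumes, for illustration only, a user model that keeps a probability distribution over a few discrete user types and updates it with Bayes’ rule after each user reply; the intrinsic reward for a turn is the increase in probability assigned to the user’s actual type, which is known when training against simulated users. The names `bayes_update`, `curiosity_reward`, `turn_reward`, the user types, and the `weight` parameter are hypothetical and are not taken from the CURIO paper.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float], likelihoods: Dict[str, float]) -> Dict[str, float]:
    """Posterior over user types after one user reply.
    likelihoods[k] is P(reply | user type k)."""
    unnorm = {k: prior[k] * likelihoods[k] for k in prior}
    z = sum(unnorm.values())
    return {k: v / z for k, v in unnorm.items()}


def curiosity_reward(prior: Dict[str, float], posterior: Dict[str, float],
                     true_type: str) -> float:
    """Intrinsic reward (assumed, simplified form): how much the turn improved
    the user model's belief in the user's actual type."""
    return posterior[true_type] - prior[true_type]


def turn_reward(extrinsic: float, prior: Dict[str, float], posterior: Dict[str, float],
                true_type: str, weight: float = 0.5) -> float:
    """Total per-turn reward passed to the RL update: task reward plus a
    weighted curiosity bonus."""
    return extrinsic + weight * curiosity_reward(prior, posterior, true_type)


# Usage: the agent asks "Would a diagram help?" and the simulated user says yes.
prior = {"prefers_visuals": 0.5, "prefers_text": 0.5}
likelihoods = {"prefers_visuals": 0.9, "prefers_text": 0.2}
posterior = bayes_update(prior, likelihoods)
r = turn_reward(0.0, prior, posterior, true_type="prefers_visuals")
print(round(posterior["prefers_visuals"], 3), round(r, 3))  # 0.818 0.159
```

A question that cannot distinguish between user types (equal likelihoods) leaves the belief unchanged and earns no intrinsic reward, so the agent is pushed toward turns that actually reveal something about the user.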

Additional members of the research team include Allen School professor Natasha Jaques, Google DeepMind’s Jiaxing Wu, Google Research’s Lior Shani, and Marwa Abdulhai, a Ph.D. student at the University of California, Berkeley.

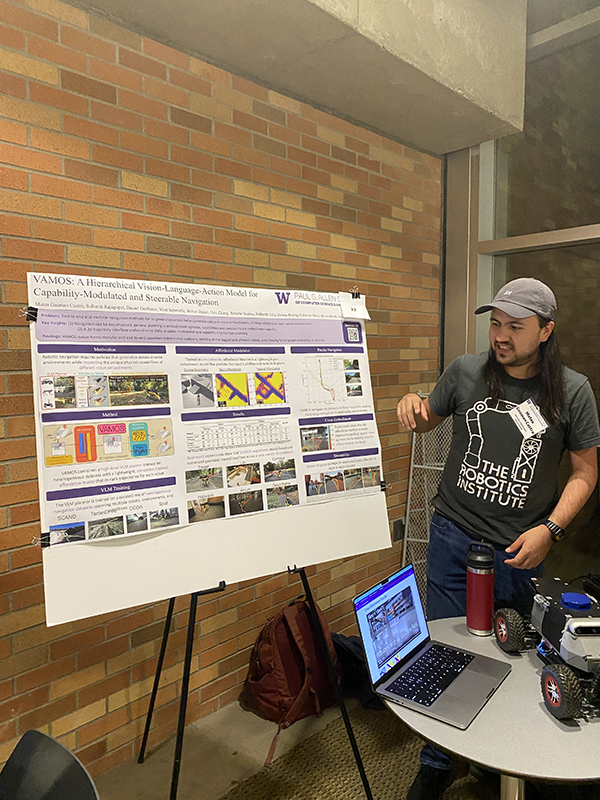

Madrona Prize Runner-up / VAMOS: A Hierarchical Vision-Language-Action Model for Capability-Modulated and Steerable Navigation

Another research team won recognition for VAMOS, a hierarchical vision-language-action (VLA) model that helps robots navigate across diverse environments. On real-world indoor and outdoor navigation courses, VAMOS achieved three times the success rate of other state-of-the-art models.

“Contrary to the idea that simply scaling up data improves generalization, we found that mixing data from many robots and environments can actually degrade performance, because not all robots can perform the same movements or handle the same terrain,” said Allen School Ph.D. student Mateo Guaman Castro, who accepted the award on behalf of the team. “Our system, VAMOS, tackles this problem by decomposing navigation into a hierarchy. A high-level VLM planner proposes multiple possible paths, and a robot-specific ‘affordance modulator,’ trained safely in simulation, selects the path that best suits the robot’s physical capabilities.”
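The hierarchical split Guaman Castro describes can be illustrated with a toy selection step. In this sketch, assumed for illustration, the planner’s proposals arrive as `CandidatePath` objects labeled with the terrain of each segment, and a per-robot dictionary of traversal probabilities stands in for the affordance modulator, which in VAMOS is a learned model trained in simulation. All names, terrain labels, and numbers here are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence


@dataclass
class CandidatePath:
    """One path proposed by the high-level planner (hypothetical format)."""
    name: str
    terrain: Sequence[str]  # terrain type of each segment along the path


def path_score(path: CandidatePath, affordance: Dict[str, float]) -> float:
    """Probability this robot traverses every segment, treating segments as
    independent; terrain missing from the table counts as untraversable."""
    score = 1.0
    for t in path.terrain:
        score *= affordance.get(t, 0.0)
    return score


def select_path(candidates: List[CandidatePath], affordance: Dict[str, float],
                min_score: float = 0.5) -> Optional[CandidatePath]:
    """Pick the proposal that best suits this robot's capabilities,
    or None if no proposal is likely enough to succeed."""
    best = max(candidates, key=lambda p: path_score(p, affordance))
    return best if path_score(best, affordance) >= min_score else None


# Usage: the same planner proposals, filtered for two different robots.
paths = [CandidatePath("stairs shortcut", ["pavement", "stairs"]),
         CandidatePath("ramp detour", ["pavement", "grass", "ramp"])]
wheeled = {"pavement": 0.99, "grass": 0.8, "ramp": 0.95, "stairs": 0.0}
legged = {"pavement": 0.99, "grass": 0.9, "ramp": 0.95, "stairs": 0.9}
print(select_path(paths, wheeled).name)  # ramp detour
print(select_path(paths, legged).name)   # stairs shortcut
```

Swapping only the affordance table changes which proposal wins, which mirrors the design choice of sharing the high-level planner across robots while keeping robot-specific knowledge in a separate component.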

The VAMOS research team also includes Allen School professors Byron Boots and Abhishek Gupta; Ph.D. students Rohan Baijal, Rosario Scalise, and Sidharth Talia; undergraduates Sidharth Rajagopal and Daniel Gorbatov; alumni Matt Schmittle (Ph.D., ’25), now at Overland AI, and Okti Chan (B.S., ’25), now at NVIDIA; Celso de Melo of the U.S. Army DEVCOM Army Research Laboratory; and Emma Romig, director of the Allen School’s Robotics Lab.

Madrona Prize Runner-up / Dynamic 6DOF VR reconstruction from monocular video

Allen School researchers were also recognized for their work transforming two-dimensional videos into dynamic three-dimensional scenes that users can experience in immersive virtual reality (VR), revealing additional depth, movement, and perspective.

“The key challenge is understanding the 3D world from a single flat video,” said Allen School Ph.D. student Baback Elmieh, who accepted the award. “To address this, we first use AI models to ‘imagine’ what a scene would look like from different angles. We then use new techniques to model the motion of the entire scene while refining the details of each frame. This combined approach allows us to handle fast-moving action, bringing us closer to reliving 2D videos and historical moments as dynamic 3D experiences.”

Elmieh developed the project in collaboration with Allen School professors Steve Seitz, Ira Kemelmacher-Shlizerman, and Brian Curless.

People’s Choice Award / MolmoAct

The evening concluded with the presentation of the People’s Choice Award, where attendees voted for their favorite posters and demos.

Shwetak Patel, director of development and entrepreneurship at the Allen School and Washington Research Foundation Entrepreneurship Endowed Professor in Computer Science and Engineering and Electrical and Computer Engineering (ECE), presented the 2025 award to Allen School Ph.D. student Jiafei Duan and UW Department of Applied Mathematics Ph.D. student Shirui Chen for their work on MolmoAct. MolmoAct is an open-source action reasoning model developed by a team of researchers at the University of Washington and the Allen Institute for AI (Ai2) that enables robots to interpret and understand instructions, perceive their environment, generate spatial plans, and execute them as goal-directed trajectories.

“MolmoAct is the first fully open action reasoning model for robotics. Our goal is to build generalist robotic agents that can reason before they act. This paradigm has already influenced subsequent waves of research,” said Duan, who worked on the project as a graduate student researcher at Ai2.

The researchers invited attendees to test MolmoAct’s reasoning abilities by placing an object, such as a pen or a tube of lip gloss, in front of the robot arm and watching the robot learn to pick it up in real time.

The team also includes Allen School professors Ali Farhadi, Dieter Fox and Ranjay Krishna; Ph.D. students Jieyu Zhang and Yi Ru Wang; master’s student Jason Lee; undergraduate students Haoquan Fang, Boyang Li and Bohan Fang; ECE master’s student Shuo Liu; and Angelica Wu, who recently joined the Department of Industrial and Systems Engineering. Farhadi is CEO of Ai2, and Fox and Krishna also spend part of their time leading research initiatives at Ai2. The team also includes Ai2’s Yuquan Deng (B.S., ’24), Sangho Lee, Winson Han, Wilbert Pumacay, Rose Hendrix, Karen Farley and Eli VanderBilt.

For more on the Allen School’s 2025 Research Showcase and Open House, see GeekWire’s coverage of the event and Madrona’s announcement of the prize winners.