Combining AI with edge computing can be complicated: the edge is a place where resource costs must be controlled, and where other IT optimization initiatives, such as cloud computing, are difficult to apply.

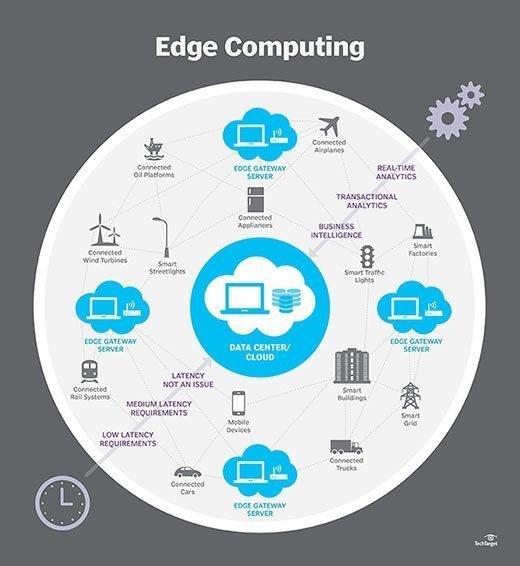

Edge computing is an application deployment model in which some or all of an application is hosted close to the real-world systems it is designed to support. These applications are often described as real-time or IoT applications because they interact directly with real-world elements such as sensors and effectors, and they require high reliability and low latency.

The edge is typically on premises, close to users and processes, and often consists of a small server with limited performance and minimal system software. This local edge is often linked to other application components running in the cloud.

As AI grows in power and complexity, opportunities for edge deployment scenarios increase. Deploying AI in edge computing environments offers a wide range of benefits across industries, but successful implementation requires specific capability and platform considerations.

The Benefits of AI in Edge Computing

AI deployed in edge computing environments (also known as edge AI) offers many benefits.

For edge applications that process events and return commands to effector devices or messages to users, edge AI enables better and more flexible decision-making than simple edge software alone can provide. This includes applications that correlate events from one or more sources before generating a response, and applications that require complex analysis of event content.

Other benefits of AI in edge computing environments include:

- Improved speed.

- Stronger privacy.

- Improved application performance.

- Reduced latency and costs.

Considerations for AI in Edge Computing

When deploying AI for real-time edge computing, organizations must address two key technical constraints: hosting requirements, which depend on edge system capabilities, and latency budgets.

Hosting Requirements

Most machine learning tools can run on server configurations suitable for edge deployment because they don't require banks of GPUs. Researchers are also increasingly developing less resource-intensive versions of more complex AI tools, such as the large language models popularized by generative AI services, that can run on local edge servers, provided the system software is compatible.
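A rough, weights-only sizing check can help decide whether a given model is even a candidate for a local edge server. The sketch below assumes the memory needed for a model's weights is roughly its parameter count times the bytes stored per parameter; it ignores activations, caches, and runtime overhead, and the 80% headroom factor is a hypothetical safety margin, not a vendor recommendation. The function names are illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed just to hold model weights, in GB (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_edge(num_params: float, bytes_per_param: float,
                 server_memory_gb: float, headroom: float = 0.8) -> bool:
    """Return True if the weights fit within a fraction of the server's memory.

    headroom is a hypothetical margin that leaves room for the OS,
    the edge application itself, and runtime buffers.
    """
    return weight_memory_gb(num_params, bytes_per_param) <= server_memory_gb * headroom


# A 7-billion-parameter model on a 16 GB edge server
print(fits_on_edge(7e9, 2.0, 16))   # 16-bit weights
print(fits_on_edge(7e9, 0.5, 16))   # 4-bit weights
```

For example, a 7-billion-parameter model stored at 2 bytes per parameter needs about 14 GB for its weights, which exceeds 80% of a 16 GB server, while the same model stored at 0.5 bytes per parameter needs about 3.5 GB and fits. A real deployment decision would still need to be checked against measured memory use.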

If the required AI capabilities are not available in a form suitable for deployment on a local edge server, events can be passed to the cloud or data center for processing, provided the application's latency budget is still met.

Latency Budget

The latency budget is the maximum time your application can tolerate between receiving an event that triggers processing and responding to the actual system that generated that event. This budget must cover the transmission time plus all processing time.
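The budget arithmetic can be written down directly: the time to move the event to wherever it is processed, plus all processing time, plus the time to deliver the response must not exceed the budget. The following is a minimal sketch; the class and field names are hypothetical, and the millisecond figures in the usage lines are made-up values for illustration.

```python
from dataclasses import dataclass


@dataclass
class LatencyEstimate:
    """All values in milliseconds."""
    inbound_ms: float     # event source -> processing point
    processing_ms: float  # all processing, including AI inference
    outbound_ms: float    # processing point -> system that must be acted on

    def total_ms(self) -> float:
        return self.inbound_ms + self.processing_ms + self.outbound_ms


def within_budget(estimate: LatencyEstimate, budget_ms: float) -> bool:
    """True if transmission plus processing time fits inside the latency budget."""
    return estimate.total_ms() <= budget_ms


# Hypothetical figures: a nearby edge server vs. a distant cloud region
edge = LatencyEstimate(inbound_ms=1, processing_ms=20, outbound_ms=1)
cloud = LatencyEstimate(inbound_ms=30, processing_ms=10, outbound_ms=30)
print(within_budget(edge, 50), within_budget(cloud, 50))
```

Even though the cloud processes the event faster in this example, its transmission time pushes the total to 70 ms, past a 50 ms budget, while the edge path totals 22 ms.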

A latency budget can be a soft constraint, where missing it only delays an activity; an example is an application that reads a vehicle's RFID tag or manifest barcode and routes the vehicle for unloading. Or it can be a hard constraint, where missing it can lead to catastrophic failure; examples include applications that dump dry materials onto a moving train car or merge vehicles into high-speed traffic.
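One way to express the soft/hard distinction in code is to decide what happens when a response misses its budget. In this sketch, assumed rather than taken from any particular platform, a late decision is still used under a soft budget, while under a hard budget it is replaced by a predefined safe command. The command strings, including `SAFE_STOP`, are hypothetical. Note that the deadline is only checked after the decision returns; a production hard-real-time system would also need a watchdog or preemption.

```python
import time
from enum import Enum
from typing import Callable


class BudgetKind(Enum):
    SOFT = "soft"  # missing the budget only delays the activity
    HARD = "hard"  # missing the budget could cause a catastrophic failure


def respond(decide: Callable[[], str], budget_s: float, kind: BudgetKind,
            safe_command: str = "SAFE_STOP") -> str:
    """Run a decision and apply the budget policy to its result.

    The deadline is checked only after decide() returns.
    """
    start = time.monotonic()
    command = decide()
    elapsed_s = time.monotonic() - start
    if elapsed_s <= budget_s:
        return command
    if kind is BudgetKind.HARD:
        # Too late to act on the decision: fall back to a predefined safe state
        return safe_command
    # Soft budget: the late command is still usable; the activity is just delayed
    return command


print(respond(lambda: "ROUTE_TO_BAY_3", 0.5, BudgetKind.SOFT))
```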

When to Bring AI to the Edge

Deciding when to host AI at the edge requires balancing the compute power available at a particular point against the round-trip latency between that point, the event source that triggers processing, and the destination of the response. The higher the latency budget, the more flexibility you have in placing your AI processes, and the more compute power you can bring to your application.
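The trade-off can be sketched as a placement rule: among candidate hosting points, keep those whose round trip plus processing fits the budget, then pick the one with the most compute. The `Site` fields, the relative compute scores, and the latency numbers below are all hypothetical; a real placement decision would use measured latencies and capacity.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Site:
    name: str
    relative_compute: float  # hypothetical capacity score; higher = more AI power
    round_trip_ms: float     # event source -> site -> response destination
    processing_ms: float     # expected processing time at this site


def choose_site(sites: Sequence[Site], budget_ms: float) -> Optional[Site]:
    """Pick the most powerful site whose round trip plus processing fits the budget."""
    feasible = [s for s in sites if s.round_trip_ms + s.processing_ms <= budget_ms]
    if not feasible:
        return None  # no placement meets the budget
    return max(feasible, key=lambda s: s.relative_compute)


sites = [
    Site("edge", relative_compute=1, round_trip_ms=2, processing_ms=25),
    Site("datacenter", relative_compute=10, round_trip_ms=25, processing_ms=15),
    Site("cloud", relative_compute=100, round_trip_ms=80, processing_ms=10),
]
for budget in (30, 50, 100):
    print(budget, choose_site(sites, budget).name)
```

With these made-up numbers, a 30 ms budget leaves only the edge, 50 ms also admits the data center, and 100 ms admits the cloud, which illustrates how a larger budget widens the placement options.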

While some IoT systems process events individually, other applications benefit from complex event correlation. In traffic control, for example, the optimal commands depend on events from multiple sources, such as traffic sensors.
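Event correlation usually starts by grouping recent events from several sources before deciding. The sketch below sums vehicle counts reported by multiple sensors per intersection approach within a sliding time window and serves the busiest approach. The simple "most vehicles wins" rule is a stand-in for whatever AI model would actually make the decision, and the event fields, window length, and `"hold"` result are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class SensorEvent:
    sensor_id: str
    approach: str        # e.g. "north", "east"
    timestamp_s: float
    vehicles: int


def choose_green(events: Iterable[SensorEvent], now_s: float,
                 window_s: float = 30.0) -> str:
    """Correlate recent events from all sensors and return the approach to serve next."""
    totals: Dict[str, int] = defaultdict(int)
    for e in events:
        # Only events inside the correlation window contribute
        if now_s - window_s <= e.timestamp_s <= now_s:
            totals[e.approach] += e.vehicles
    if not totals:
        return "hold"  # hypothetical: keep the current phase when there is no recent data
    return max(totals, key=totals.get)


events = [
    SensorEvent("n1", "north", 95, 5),
    SensorEvent("n2", "north", 98, 4),
    SensorEvent("e1", "east", 97, 7),
    SensorEvent("e1", "east", 10, 100),  # stale, outside the window
]
print(choose_green(events, now_s=100))
```

Neither north sensor alone outweighs the east sensor, but correlating the two north sensors does, which is the kind of decision a single-event system would miss.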

Event content analysis is also crucial in healthcare. For example, AI can analyze blood pressure, pulse, and respiration and raise an alarm if the current readings, trends in those readings, or the relationships between different indicators measured at the same time suggest that a patient is at risk.
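The three kinds of analysis named above (current readings, trends, and relationships between indicators) can be illustrated with a rule-based sketch that stands in for a trained model. The ranges and trend thresholds below are arbitrary illustrative values, NOT clinical guidance, and the field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Vitals:
    systolic_mmHg: float
    pulse_bpm: float
    resp_per_min: float


# Illustrative ranges only, NOT clinical thresholds
RANGES = {
    "systolic_mmHg": (90, 180),
    "pulse_bpm": (50, 120),
    "resp_per_min": (10, 24),
}


def slope(values: Sequence[float]) -> float:
    """Average change per sample between the first and last reading."""
    return (values[-1] - values[0]) / (len(values) - 1) if len(values) > 1 else 0.0


def assess(history: Sequence[Vitals]) -> List[str]:
    """Return alarm messages; history must hold at least one reading, oldest first."""
    alarms = []
    current = history[-1]
    # 1. Current readings outside the illustrative ranges
    for field, (low, high) in RANGES.items():
        value = getattr(current, field)
        if not low <= value <= high:
            alarms.append(f"{field} out of range: {value}")
    # 2. Trends across the recent window
    pulse_trend = slope([v.pulse_bpm for v in history])
    bp_trend = slope([v.systolic_mmHg for v in history])
    if pulse_trend > 5:
        alarms.append("pulse rising quickly")
    # 3. Relationship between indicators changing at the same time
    if pulse_trend > 2 and bp_trend < -2:
        alarms.append("pulse rising while blood pressure falls")
    return alarms


print(assess([Vitals(120, 80, 16), Vitals(112, 86, 18), Vitals(104, 92, 20)]))
```

In the example, every individual reading is inside its range, yet the combination of a rising pulse and falling blood pressure still raises alarms, which is the point of analyzing trends and relationships rather than single values.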

If your latency budget allows, the application can also access databases stored locally, in the cloud, or in a data center. For example, an application can read a delivery truck's RFID tag, retrieve a copy of the truck's manifest, and use its contents to direct the truck to a bay, dispatch workers to unload it, and generate instructions for handling the cargo.
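A minimal sketch of the manifest example follows, assuming a local cache is checked first and a remote lookup is attempted only when its expected round trip fits the remaining budget. The `fetch_remote` callable, the manifest schema (`items`, `sku`, `hazardous`, `fragile`), the bay names, and the one-worker-per-ten-items rule are all hypothetical.

```python
from typing import Callable, Dict, Optional


def get_manifest(tag_id: str,
                 local_cache: Dict[str, dict],
                 fetch_remote: Callable[[str], Optional[dict]],
                 remote_rtt_ms: float,
                 remaining_budget_ms: float) -> Optional[dict]:
    # 1. A locally stored copy is the fastest option
    if tag_id in local_cache:
        return local_cache[tag_id]
    # 2. Call the cloud or data center only if the round trip fits the remaining budget
    if remote_rtt_ms <= remaining_budget_ms:
        manifest = fetch_remote(tag_id)
        if manifest is not None:
            local_cache[tag_id] = manifest  # keep a copy for later events from this truck
        return manifest
    return None  # caller falls back, e.g. to a default holding bay


def plan_unloading(manifest: dict) -> dict:
    """Turn manifest contents into a bay assignment, crew size, and handling notes."""
    items = manifest["items"]
    hazardous = any(item.get("hazardous", False) for item in items)
    return {
        "bay": "bay-hazmat" if hazardous else "bay-general",
        "workers": max(1, len(items) // 10),
        "instructions": [
            f"{item['sku']}: {'fragile handling' if item.get('fragile') else 'standard handling'}"
            for item in items
        ],
    }


cache: Dict[str, dict] = {}
manifest = get_manifest("T1", cache, lambda tag: {"items": [{"sku": "A1", "fragile": True}]},
                        remote_rtt_ms=40, remaining_budget_ms=100)
print(plan_unloading(manifest))
```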

Even when AI is not hosted in the cloud or a data center, edge applications often generate traditional transactions from events, in addition to handling local processing and responses. Organizations need to consider the relationship between edge hosting, AI, and transaction-oriented processing when planning for edge AI.
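One common way to combine the two paths, sketched here under stated assumptions, is to answer the event locally and queue a transaction record derived from the same event for later delivery to the data center, so the real-time path never waits on the transaction system. The event kinds, the `OPEN_GATE` command, and the JSON record layout are hypothetical, and the in-memory queue stands in for whatever durable messaging an actual deployment would use.

```python
import json
import queue
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Event:
    source: str
    kind: str
    payload: dict


# Outbound transactions are queued so local responses never block on the data center
outbox: "queue.Queue[str]" = queue.Queue()


def handle(event: Event) -> str:
    # Local, latency-sensitive turnaround
    command = "OPEN_GATE" if event.kind == "vehicle_arrived" else "NO_OP"
    # Traditional transaction derived from the same event, forwarded later
    outbox.put(json.dumps({"event": asdict(event), "command": command}))
    return command


def drain(batch_size: int = 100) -> List[dict]:
    """Collect up to batch_size queued transactions for upload."""
    batch = []
    while len(batch) < batch_size:
        try:
            batch.append(json.loads(outbox.get_nowait()))
        except queue.Empty:
            break
    return batch


print(handle(Event("gate-1", "vehicle_arrived", {"tag": "T1"})))
print(drain())
```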

Choosing an Edge AI Platform

A key consideration when choosing an edge AI platform is how it will be integrated and managed. For edge AI that is loosely linked with the cloud or data center, purpose-built platforms such as Nvidia EGX are optimized for both low latency and AI. For edge AI that is tightly coupled with other application components in the cloud or data center, real-time Linux variants are easier to integrate and manage.

If a public cloud provider offers an edge component (such as AWS IoT Greengrass or Microsoft's Azure IoT Edge), it is possible to split AI capabilities across the edge, the cloud, and the data center. This approach simplifies the selection of AI tools for edge hosting, because organizations can choose tools included in the provider's edge package when they are available.

Most edge AI hosting will likely use simpler machine learning models that are less resource-intensive and can be trained to handle most event processing. Deep learning forms of AI require more hosting power, but depending on model complexity, hosting them on an edge server may be practical. LLMs and other generative AI models are becoming easier to distribute to the edge, but full implementations may still require cloud or data center hosting.

Finally, consider how the edge resources used for AI will be managed. AI itself does not require management approaches different from those used for other forms of edge computing, but choosing a platform dedicated to edge and AI might require different management practices and tools.

Tom Nolle is founder and principal analyst at Andover Intel, a consulting and analytics firm that looks at evolving technologies and applications first from the perspective of buyers and their needs. With a background as a programmer, software architect, and software and network product manager, Nolle has provided consulting services and technology analysis for decades.