The BacDive database currently (2024.2 release) comprises more than 2.7 million data points covering more than 1000 data fields23. However, data availability varies markedly for the different traits and different taxa. In addition, genome sequences are not yet available for all strains in the database. Therefore, we selected traits for machine learning and downstream modeling if the corresponding genome sequences and the states of traits were available for enough strains. For this study we only selected traits with available data for more than 3000 strains. However, as Random Forest is a robust algorithm that can handle a large quantity of features such as protein families24, it might be possible to lower the threshold to 500–1000 strains per trait in the future to predict traits that are less available in the literature.

Only a limited number of the selected traits, such as the response to Gram-staining, could be classified into two states (i.e., Gram-positive and Gram-negative, ignoring the small number of Gram-variable strains). In contrast, a considerable fraction of prokaryotic traits can assume more than two states, as is the case for oxygen tolerance, or are even continuous, as is the temperature dependence of growth. We therefore devised and tested approaches that render data from prokaryotic traits with multiple states amenable to machine learning. In parallel, we assessed the effects of an uneven distribution of data points among the different trait states on the quality of predictions (determined by different metrics). From the models obtained, the importance of individual features (i.e., protein families) was deduced to arrive at a biological interpretation of the machine learning model.

Evaluation and justification of protein annotation methods and modeling approaches

In this study, we chose protein family annotations of the Pfam database as the basis for our machine learning approach after carefully evaluating alternative genomic annotation methods. Pfam is widely regarded as a comprehensive and reliable source for annotating protein domains and families due to its extensive coverage, clearly defined domain boundaries, and frequent updates25. Furthermore, Pfam has a much higher mean annotation coverage (80%; ref. 25) than Prokka (52%; ref. 4). We benchmarked Pfam annotations against other annotation tools, including eggNOG, and other tools of InterPro such as SMART, PRINTS, SUPERFAMILY, and CDD26,27. While eggNOG provided equally strong predictive performance, it required significantly longer computational runtimes and produced an excessively high number of distinct functional categories: a test annotation with 3000 bacterial genomes generated more than 2 million distinct ENOG orthologous groups, complicating downstream analysis. Tools such as SMART and PRINTS were limited by their relatively small number of classes, negatively affecting model performance. Conversely, SUPERFAMILY annotations were overly condensed, merging many distinct functional classes and thereby reducing the discriminative power of the resulting models. Although CDD28 represented a viable alternative, we selected Pfam due to its optimal balance between granularity and interpretability, alongside its established usage in the literature.

We also considered modern transformer-based genomic sequence models, which have shown promising results in numerous genomic prediction tasks and are mostly applied to human genomes so far29,30. Despite their potential, these models are particularly sensitive to subtle, potentially irrelevant genomic variations such as single nucleotide polymorphisms (SNPs) or non-coding regions. Recent literature has highlighted that transformer models, due to their highly parameterized nature and their capability to capture fine-grained sequence patterns, can inadvertently develop strong taxonomic biases and are furthermore challenging to interpret21. They also need significant computational power and a substantial amount of training data which cannot be provided for many traits31,32. Although transformer-based methods hold considerable promise for genomic analyses, we chose a feature-based machine learning approach utilizing Pfam annotations, not only to mitigate possible biases but also to allow for clearer biological interpretability, which was very important to the conclusions of this study.

Beyond binary states of traits: predicting oxygen requirements

With regard to oxygen requirements, prokaryotic strains are typically classified as anaerobes, facultative anaerobes, aerobes, aerotolerant, or microaerophilic species, depending on the amount of oxygen required or tolerated by the organism. For such traits with non-binary states, a multi-label classification model could in principle be built and trained to distinguish between each of these classes. However, this would only yield reasonable results if the data points were rather evenly distributed between the multiple classes and if individual classes could be well discriminated from each other. Neither condition is met for data describing the oxygen demand of a prokaryotic strain. For instance, contradictory data from the literature are apparent in the BacDive database, where 7.1% of the strains have more than one state listed for their oxygen requirement (Fig. 2A; data points linked by horizontal lines).

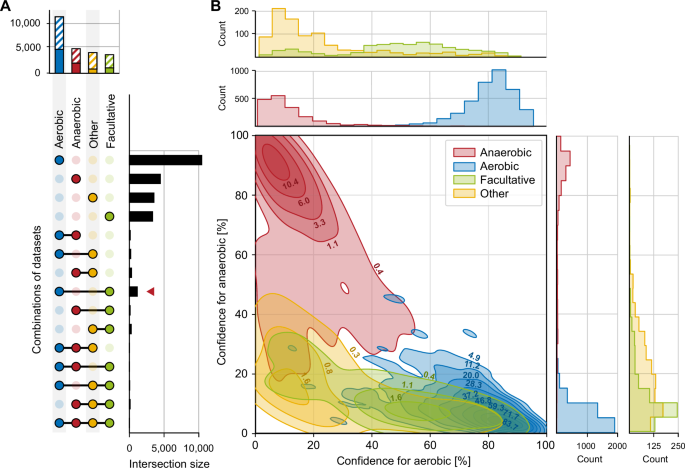

A Overview on availability and overlap of trait states for oxygen requirements of strains in the BacDive database. Obligate aerobes and obligate anaerobes were grouped with aerobes (blue) and anaerobes (red), respectively. Since aerotolerant and microaerotolerant strains had only 26 data points, they were grouped together with microaerophiles in the category ‘other’ (yellow). Facultative aerobes and facultative anaerobes were also grouped together (green). The chart on top is a summary of all data available for one state of the trait. Hatched areas represent strains that had no genome sequences available and could not be used for predictions. On the right-hand side, the sizes of overlapping datasets are shown. The red arrowhead indicates the highest amount of overlapping data for aerobic and facultative anaerobic labels. B 2D kernel density plot and histograms of the distribution of confidence values across the full dataset. In the 2D kernel density plot, confidence values of the AEROBE model of single strains are shown on the x-axis while the confidence values of the ANAEROBE model are shown on the y-axis. The isopleths delineate all combinations of the two confidence values that were determined for the same count of strains. Isopleths are labeled with the density of data points that lie within the respective area (points per percent square). Colors represent the true state of the respective trait in these strains. Red, anaerobic; blue, aerobic; green, facultative; yellow, ‘other’ (microaerophilic and aerotolerant).

To meet this challenge, two separate models for oxygen tolerance were trained: one for aerobic (AEROBE) and one for anaerobic lifestyle (ANAEROBE). Intermediate cases, i.e. facultatively anaerobic or aerobic, or microaerophilic, were treated as negative while ambiguous datasets were removed from the training and test set. The resulting predictions can be expressed as a vector with 1 for positive and 0 for negative for both models. The underlying assumption is that the models correctly identify strains that are either aerobes [1 0] or anaerobes [0 1] with high confidence, while failing to predict facultative anaerobes and microaerophiles. This failure can be expressed as [0 0] (both models negative), in which case the strain is assumed to be either a facultative anaerobe or a member of the ‘other’ category. The cases where both models predict positive [1 1] serve as a control for the quality of the models, since one of them must be wrong.
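The mapping from the two binary model outputs to a combined call can be sketched as follows. This is a minimal illustration rather than the code used in the study: the function name, the label strings, and the use of `>=` at the threshold are assumptions, while the default of 0.5 mirrors the 50% confidence threshold applied to both models.

```python
def interpret_oxygen_prediction(aerobe_conf, anaerobe_conf, threshold=0.5):
    """Combine AEROBE and ANAEROBE confidences into a vector and a label.

    The label strings and the >= comparison are illustrative assumptions.
    """
    vector = (int(aerobe_conf >= threshold), int(anaerobe_conf >= threshold))
    labels = {
        (1, 0): "aerobe",
        (0, 1): "anaerobe",
        (0, 0): "facultative anaerobe or other",
        (1, 1): "conflict: one model must be wrong",
    }
    return vector, labels[vector]


# Hypothetical confidence pairs (AEROBE, ANAEROBE) for four strains.
for pair in [(0.95, 0.03), (0.02, 0.97), (0.30, 0.10), (0.61, 0.54)]:
    print(pair, interpret_oxygen_prediction(*pair))
```

Keeping the [1 1] case as an explicit outcome, instead of resolving it by the higher confidence, preserves its role as a quality control for the two models.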

For the test dataset, the AEROBE model performed well in predicting aerobic strains with a precision of 97.0%. However, the recall was markedly lower, with only 87.9% of the truly aerobic strains found among the test data; hence the number of false negatives was higher than that of false positives. This yielded an F1 score of 92.3%. In contrast, the ANAEROBE model had a lower precision of 88.7%, while almost all true anaerobes were identified with a recall of 98.2%.
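As a quick consistency check, the F1 score can be recomputed from precision and recall as their harmonic mean. The sketch below uses the rounded percentages quoted above; the helper name `f1_from_precision_recall` is hypothetical, and small deviations from the reported F1 score are to be expected because the inputs are already rounded.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (both given as fractions)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rounded values quoted in the text for the two oxygen models.
aerobe_f1 = f1_from_precision_recall(0.970, 0.879)
anaerobe_f1 = f1_from_precision_recall(0.887, 0.982)
print(f"AEROBE F1:   {aerobe_f1:.3f}")
print(f"ANAEROBE F1: {anaerobe_f1:.3f}")
```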

To evaluate how well the two models separate the two trait states, the confidences of the AEROBE model were compared to the confidences of the ANAEROBE model (Fig. 2B). The 2D kernel density plot revealed that the two models successfully determined either true positives of aerobes or of anaerobes with marginal overlap. In fact, only a single test strain was simultaneously predicted to be positive by the AEROBE and the ANAEROBE model (with a confidence of 61% and 54%, respectively). Accordingly, the application of the two models allowed a clear distinction between anaerobes and aerobes, and we set the threshold for a positive prediction for both models to a confidence level of 50%. As anticipated, there was a larger group of 2,424 strains that were predicted as negative in both models.

Next, the ability of the two models to discriminate the other states of the trait oxygen requirement was evaluated. Based on the little overlap between isopleths for true anaerobes on one hand and those for facultatively anaerobic, and microaerophilic or aerotolerant strains on the other hand in the 2D kernel density plot, the ANAEROBE model was able to distinguish most anaerobes from bacteria in all the other classes. The AEROBE model identified all true aerobes with high confidence and excluded the microaerophilic and aerotolerant strains (orange isopleths and histograms in Fig. 2B), but it failed in many cases to exclude facultative anaerobic strains. This result is reasonable since facultative anaerobes can grow without oxygen but use it for growth if present, and therefore are expected to harbor most of the protein families involved in oxygen metabolism that are also present in aerobes.

Indeed, a subsequent analysis of the feature (i.e. Pfam) importances that underlie the decision of the respective model demonstrated that the models were consistent with biochemical characteristics of the strains (Table 1). Remarkably, the predictions of the ANAEROBE model were mostly based on the absence of protein families while the AEROBE model was mostly based on their presence. The highest impact on the ANAEROBE model was determined for the absence of protein families involved in oxidative decarboxylation (PF00198, PF16870, PF00676, PF00115) and of oxygenases (PF01494, PF00296), as well as for the presence of protein domains related to oxidative stress tolerance (PF02915). Interestingly, the highest Gini importance was determined for the presence of the protein family of Prismane/CO dehydrogenases (PF03063), whose biological role has not yet been fully understood33. Our results thus point to a role of this protein family in obligately anaerobic prokaryotes. By comparison, the AEROBE model was based on the presence of proteins involved in aerobic respiration, e.g. terminal oxidases (PF00115). Overall, these findings are mostly in line with established biochemistry and emphasize the capability of the models to predict oxygen requirements based on the gene inventory of prokaryotic strains. Accordingly, aerobes and facultative anaerobes also have the highest overlap in the BacDive database (intersection size, 576 strains; Fig. 2A, red arrowhead).
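To illustrate what a Gini-based importance measures for a presence/absence feature, the sketch below computes the Gini impurity decrease of a single split on one Pfam. It is only a building block: the Gini importance of a Random Forest averages such weighted decreases over all splits and trees. The function names and all counts are hypothetical and are not taken from the study.

```python
def gini(pos, neg):
    """Binary Gini impurity 2p(1-p) for a node with pos/neg samples."""
    n = pos + neg
    if n == 0:
        return 0.0
    p = pos / n
    return 2 * p * (1 - p)


def gini_decrease(present, absent):
    """Impurity decrease when splitting on presence/absence of one Pfam.

    present, absent: (positives, negatives) among strains with and
    without the protein family, respectively.
    """
    n_present, n_absent = sum(present), sum(absent)
    n = n_present + n_absent
    parent = gini(present[0] + absent[0], present[1] + absent[1])
    children = (n_present / n) * gini(*present) + (n_absent / n) * gini(*absent)
    return parent - children


# Hypothetical counts: the family is absent in most anaerobes (positives).
informative = gini_decrease(present=(5, 95), absent=(45, 5))
# Hypothetical counts: same class ratio with and without the family.
uninformative = gini_decrease(present=(10, 20), absent=(40, 80))
print(informative, uninformative)
```

The split is equally informative whether the positive class is enriched among strains carrying the family or among strains lacking it, which is why a model can rest mainly on absences, as described for the ANAEROBE model.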

Detecting distinct trait states in seemingly continuous data and determination of the underlying features

Continuous data pose a particular challenge since the boundaries between states and hence different classes are not easily defined. We chose the prokaryotic growth temperatures reported in the literature and accessible through BacDive as an example to address this problem (Fig. 3A). Certain prokaryotic strains can have a wide range of growth temperatures. As an example, Caenimicrobium hargitense DSM 29806 can grow between 4 and 65 °C, but is still considered a mesophile as its optimal growth temperature is about 28 °C. Therefore, temperature ranges were not considered, reducing the training data set to 75.5% of strains with temperature data where a single growth temperature was available in BacDive. However, these data points may not be the optimal growth temperature, as often only one temperature is tested or reported in the literature.

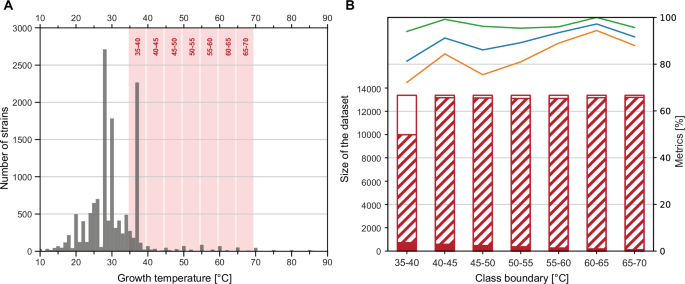

A Overview on growth temperature data available in the BacDive database, limited to strains with available genome sequences. Shown is the number of strains with reported growth in a specific temperature range of 1 °C. The temperature ranges that are excluded to delineate the datasets for the model runs depicted in B are shown in red. B Evaluation metrics of the THERMO model, trained and tested with different subsets of the data. The x-axis gives the specific 5 °C-interval applied to delineate the two classes in the individual model run. The y-axis for the column chart is on the left. Hatched areas of columns mark the amount of truly negative data, filled areas the amount of truly positive data, empty areas the amount of discarded data from within the 5 °C-interval. The y-axis for the line chart is on the right. Green, recall; orange, precision; blue, F1 score.

Bacteria are classified as thermophiles when exhibiting optimum growth temperatures of 45 °C or higher34,35. To establish a model (THERMO) that reliably differentiates between thermophiles and non-thermophiles and also allows identification of the underlying distribution of protein families, various limits for the classification of the two different states were tested (Fig. 3B). Our approach accounted for the fact that, in some cases, different publications report different growth temperatures for the same strain, which could result in the same strain being assigned to different classes. To improve the resolution between the two classes in the THERMO model, data in a 5 °C gap at the boundary between the two states were excluded from the training. This latter strategy increased the F1 score by about 10% and hence the performance of the model.

Applying the 35–40 °C boundary (35 ≤ x < 40, with x being removed) in the modeling experiment returned the lowest value for all quality metrics, most likely because it had the lowest number of data points in the training dataset, from which numerous strains that grow at 37 °C had to be excluded (Fig. 3A). However, the model employing the 40–45 °C interval as boundary condition performed well; both precision and recall were particularly high when boundaries were set to higher temperatures (Fig. 3B). Shifting the classification boundary to higher temperatures, the precision first dropped and then increased markedly reaching a peak at 60–65 °C boundary temperature interval. However, the training dataset became increasingly unbalanced towards higher boundary temperature intervals. Since the number of data points in the training dataset was also significantly reduced at higher temperatures, the high values of the quality metrics are likely caused by overfitting.
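The labeling scheme with an excluded 5 °C gap can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `thermo_label` and the example temperatures are hypothetical, the half-open interval follows the notation above (lower bound ≤ x < upper bound is removed), and the default of 40–45 °C corresponds to one of the tested boundaries.

```python
def thermo_label(temp_c, gap_low=40.0, gap_high=45.0):
    """Assign a class label based on a single reported growth temperature.

    Returns 1 for the positive class (at or above the gap), 0 for the
    negative class (below the gap), and None for temperatures inside the
    excluded interval gap_low <= temp_c < gap_high.
    """
    if gap_low <= temp_c < gap_high:
        return None
    return 1 if temp_c >= gap_high else 0


# Hypothetical growth temperatures in degrees Celsius.
temps = [28.0, 37.0, 40.0, 44.9, 45.0, 65.0]
labels = [thermo_label(t) for t in temps]
training = [(t, y) for t, y in zip(temps, labels) if y is not None]
print(labels)
print(training)
```

Shifting `gap_low` and `gap_high` in 5 °C steps reproduces the kind of boundary scan described above; with the 35–40 °C gap, every strain reported at 37 °C would drop out of the training data.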

The final THERMO model was built employing the 40–45 °C boundary and provided evidence of a genetic basis of the established classification of thermophiles (Table 2). Most evident was an increased relevance of protein families related to ribosome quality control (PF09382, PF05833), nucleotide repair (PF01612, PF03352), and for the metabolism of the compatible solutes spermidine and spermine (PF01564, PF02675). These results prove that the threshold temperature established decades ago to distinguish thermophilic from mesophilic prokaryotes has a specific biological basis and is not just a simple, man-made convention, further supported by other studies. Smith et al. identified the mesophile-thermophile temperature threshold more precisely and objectively35 and Zheng and Wu could identify misclassified thermophilic or mesophilic bacteria based on genomic information36.

In the future, the model performance could be increased further by training it only with optimum temperature values. Currently, too few data are available for optimum growth temperature; 85% of the present BacDive dataset would therefore have to be excluded from the model, which in turn decreases the model performance substantially (Supplementary Fig. S2). Our findings emphasize the high demand for more standardized phenotypic information on prokaryotes for future machine learning and other applications of artificial intelligence.

Improving models through iterations of machine learning and expert curation

In contrast to oxygen requirement and growth temperature, motility as such is a binary trait since a bacterium can be either motile or not. However, motility is conferred by two types of mechanisms, flagellar motion and gliding. The first type can include several different flagellum arrangements and motor proteins. The latter does not depend on flagella for movement but instead on membrane proteins, type IV pili or polysaccharide jets. Existing models of previous studies diverged in terms of their capabilities for predicting motility. Whereas one successful model15 reached a higher accuracy (the only available metric) of 0.93, other models returned accuracies of only 0.83 to 0.88, which are unsatisfactory13,14,37. A closer inspection of the underlying modeling procedures revealed that the superior model had been trained solely on motion conferred by flagella while the poor models did not distinguish between the known types of motility. When limited to data sets with genome sequences, BacDive offers a total of 7090 standardized data points for the binary trait ‘motility’ with 2899 motile and 4191 non-motile strains, but the type of motility (flagella or gliding) is known for only 16.0% (464) of the motile strains (Fig. 4A). Among the latter, 68.7% (319) were known to exhibit flagellar motion that could be further classified based on the arrangement of the flagella. To test the impact of the mode of motility on the model quality, we started to build models with the whole motility dataset and then iteratively engineered the input of data.

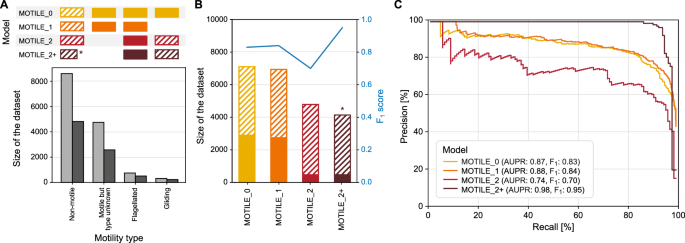

A Availability of data points for different types of motility in the BacDive database. Light grey columns give the total number of data points in the BacDive database and dark grey columns the number of these strains for which genome sequences were available. The information above indicates which datasets have been used as positive (filled) or negative (hatched) labels for the different models. B Size of the datasets available for the four MOTILE models (columns) and corresponding F1 scores (blue line). Hatched bars give numbers of negative, filled bars those of positive data (same as in panel A). The corresponding datasets for the different motility mechanisms are depicted in panel A. The dataset marked by * was reduced by removing strains that contained more than 10 flagellum proteins. C Precision-recall curves showing the performance of the four different versions of the MOTILE model.

The initial MOTILE_0 model was trained using data for all strains from the BacDive database for which both an annotation of motility and a genome sequence were available. The 7090 strains in the dataset had an acceptable distribution of 1:1.4 between the two classes ‘motile’ and ‘non-motile’ (Fig. 4B). As anticipated based on previous modeling attempts in the literature with just two classes13,14,15,37, the model showed only mediocre performance with an AUPR of 0.87 (Fig. 4C), an F1 score of 0.83 (Fig. 4B), a recall of 80.9% and a precision of 84.2%. A closer look into the feature importances of this first model revealed that decisions were based on the presence of flagellar proteins (Supplementary Table S1). In line with this observation, 89% of the false predictions for which the type of motility was known were annotated as gliding. Hence, this simple model of binary trait states fails to predict motility that is based on a gliding mechanism.

In the next iteration, the input datasets were engineered to improve the model. First, strains known to exhibit gliding motility were omitted from the input datasets, which resulted in a small increase of both the AUPR and the F1 score to 0.88 and 0.86, respectively (MOTILE_1 model, Fig. 4C, recall: 82.0%, precision: 86.5%). Since the group of motile strains with an unknown type of motility is likely to contain a larger fraction of gliding bacteria, we limited the positive group to those strains with proven flagellar motility in our next iteration (MOTILE_2 model). This iteration is comparable to one of the modeling attempts of the past15. An in-depth analysis showed that protein families with highest importance for the predictions of the MOTILE_2 model were all part of the protein complex forming the flagellum (Table 4), except for one that is part of the Type III secretion system (PF18269), confirming that the model is based on the molecular inventory known for flagellar motility. Surprisingly, however, the performance of this model dropped to an F1 score of 0.70 (Fig. 4), and precision and recall decreased markedly to 68.9% and 70.6%, respectively. While the imbalance of the dataset led to an increase in the AUC to 0.95, the AUPR dropped to 0.74, reflecting the performance drop mentioned above and further supporting the choice of AUPR over AUC. A comparison of the different classes revealed that a large number of the protein families that determined the predictions were not limited to flagellated bacteria but also occurred in up to 26.5% of the non-motile strains, explaining the large number of false positives. The same is also true for the MOTILE_0 and MOTILE_1 models, but these false positives were diluted by the larger datasets in these first modeling iterations.

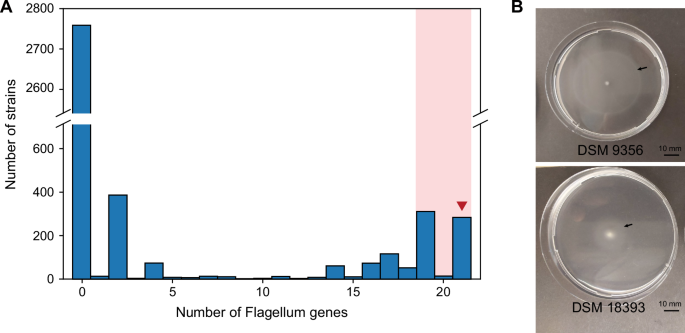

This seeming contradiction prompted us to analyze the actual distribution patterns of the protein families associated with flagellar motility (Table 3) across the genome sequences of non-motile strains. As expected, the largest part of these genomes (>75%) encoded none of the protein families. However, 557 strains (13.4%) had more than 10 of the flagellum protein domains, and of these 557 strains, 327 had even ≥ 19 of the queried 21 protein families (Fig. 5A).

A Number of flagellum genes in strains that were reported as non-motile in the literature. Shaded in light red are strains that were excluded from the negative training set in the MOTILE_2+ model. The red arrowhead indicates groups of strains of which representatives were tested for motility in laboratory experiments. B Experimental proof of motility of two bacterial strains on semi-solid agar. The arrows point to the edge of the opaque area to which the cells have moved. Both have been reported as non-motile in the literature.

In the next step, we removed the 327 strains that encoded large parts of the protein families needed to synthesize a flagellum from the data set (≥19 Pfams annotated, as highlighted by red shading in Fig. 5A). This improved the performance of the resulting MOTILE_2+ model significantly compared to the MOTILE_2 model, reaching an F1 score of 0.95 (Fig. 4) and a precision of 93.8%. The iterations of the MOTILE model clearly exemplify how machine learning models can be improved significantly when several iterations of modeling are conducted, and these alternate with assessments of the previous model output based on the knowledge of the underlying biological mechanisms.
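The curation step described above, removing non-motile strains whose genomes encode nearly the full set of flagellar protein families, can be sketched as a simple set-based filter. The function name, the strain identifiers and the placeholder family IDs (`FLAG00` to `FLAG20`) are hypothetical stand-ins for the 21 queried Pfam families; only the cutoff of 19 is taken from the text.

```python
def filter_negative_set(nonmotile, flagellar_pfams, min_hits_to_exclude=19):
    """Drop non-motile strains that encode many flagellar protein families.

    nonmotile: dict mapping strain ID -> set of annotated family IDs.
    Strains with at least min_hits_to_exclude flagellar families are
    removed from the negative training set.
    """
    kept = {}
    for strain, pfams in nonmotile.items():
        hits = len(pfams & flagellar_pfams)
        if hits < min_hits_to_exclude:
            kept[strain] = pfams
    return kept


# 21 placeholder IDs standing in for the queried flagellar families.
flagellar = {f"FLAG{i:02d}" for i in range(21)}
ordered = sorted(flagellar)
nonmotile = {
    "strain_A": {"OTHER01"},                    # no flagellar families
    "strain_B": set(ordered[:20]) | {"OTHER02"},  # 20 of 21 families
    "strain_C": set(ordered[:10]),              # 10 of 21 families
}
kept = filter_negative_set(nonmotile, flagellar)
print(sorted(kept))
```

Strains removed by such a filter are not relabeled as motile; they are only withheld from the negative class, which keeps the step conservative until experimental confirmation is available.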

The 327 strains that were found to contain the majority of protein families (Pfam) associated with flagellar motility represent interesting targets for future detailed growth experiments because motility genes might not be expressed, and motility might hence not have been detectable, under the specific cultivation conditions employed in previous laboratory studies. For instance, certain bacteria (e.g. Actinoplanes) are immotile in their vegetative stage and only form flagellated spores. Of the strains with 21 protein families for flagella genes, a sample of 5 was selected to test for possible motility. Four of them showed motility in soft agar (Supplementary Table S2), one of which moved particularly far in the experiment (Fig. 5B, DSM 9356). This experiment serves as a proof-of-concept to demonstrate how a machine learning model can also identify phenotypes that are falsely reported in the literature.

Model performance across distinct phyla

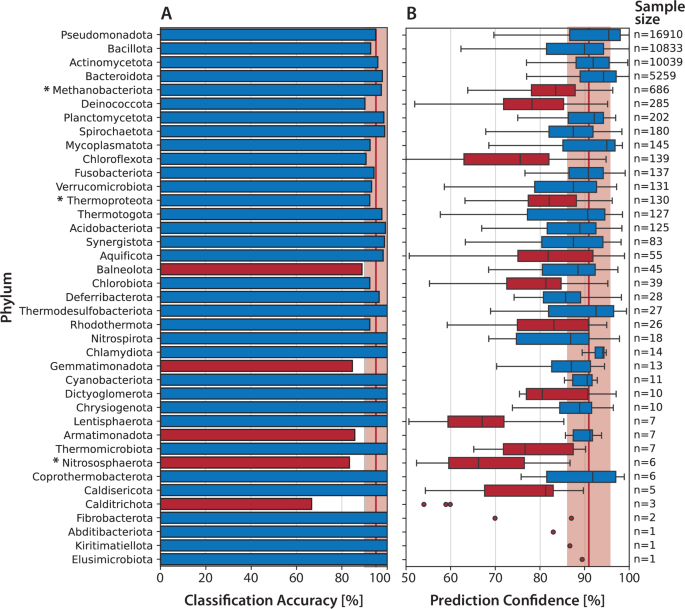

Our evaluation of model performance across different bacterial and archaeal phyla demonstrates high overall accuracy, with a median classification accuracy of 94.9% and a median prediction confidence of 91.6% (Fig. 6). This generally robust performance highlights the suitability of our Random Forest-based approach for predicting phenotypic traits from genomic annotations across diverse microbial lineages. However, caution has to be exercised when interpreting results for phyla with low representation, particularly the 11 phyla with fewer than 10 annotated traits each, as the limited sample size renders their accuracy and confidence metrics statistically uncertain.

A Classification accuracy (percentage of correctly predicted phenotypic traits) per phylum. B Distribution of prediction confidences (probability scores assigned by Random Forest models) across phyla. Phyla belonging to the archaeal domain are marked with an asterisk (*). The vertical red lines indicate the overall median across all phyla, with a surrounding 5% interval shown as a shaded red area. Phyla with a classification accuracy or median prediction confidence below the 5th percentile are highlighted in red, indicating reduced performance in these groups. Sample sizes for each phylum are shown on the right. Please note that these numbers represent the number of annotated traits per phylum, not the number of strains.

Well-studied phyla with a high sample representation such as Bacillota, Pseudomonadota, and Actinomycetota consistently yielded high classification accuracy and prediction confidence, underscoring the effectiveness of our models when sufficient genomic and phenotypic data are available. Interestingly, certain less represented phyla, such as Mycoplasmatota and Thermodesulfobacteriota, also showed excellent prediction accuracy, indicating that the genomic markers were tightly associated with phenotypic traits, conserved and characteristic for these groups. In comparison, archaeal phyla, although taxonomically distinct from bacteria, displayed notably lower median prediction confidence despite moderate accuracy of about 95%, except for Nitrososphaerota, whose extremely limited sample size (n = 6) further complicates a reliable interpretation. The reduced confidence in archaeal predictions might be caused by unique genomic features and differences in annotations as compared to bacterial reference genomes, emphasizing the need for further sequencing and annotation refinement within the archaeal domain.

Notably, certain bacterial phyla yielded unexpectedly low performance metrics. Calditrichota, which were only represented by one strain with 3 annotated traits, displayed both very low classification accuracy and prediction confidence, which may reflect unique and/or divergent genomic signatures of this particular strain that are poorly captured by the current feature representation, or significant annotation gaps. Despite acceptable classification accuracies (~90%) and coverage by 83 and 34 species, respectively, Deinococcota and Chloroflexota also exhibited median prediction confidences below 80%, which can be attributed to an increased genomic variability within these taxa. Both phyla contain species highly resistant to extreme environmental conditions, and are known extremophiles. These adaptations are reflected in unique molecular signatures, such as conserved signature indels (CSIs) and proteins (CSPs) in Deinococcota38. Members of Chloroflexota are known to comprise a diversity of phenotypes, including anoxygenic phototrophs, aerobic thermophiles and the use of unusual or even toxic compounds as electron acceptors39. This diversity makes protein-based prediction challenging. However, since classification accuracies are still acceptable in both cases, we assume high reliability of the models even at the lower confidence values.

Our findings suggest that phylogenetic representation alone is not the primary determinant of model robustness. Instead, the extent to which a phylum is metabolically or ecologically distinct appears to have a greater effect on predictive performance. Taxa adapted to specialized or extreme niches—such as Deinococcota and Chloroflexota—pose particular challenges due to their unique genomic features and divergent protein repertoires, which are often underrepresented in standard annotation databases. Thus, beyond increasing taxonomic coverage, improving model generalizability will require incorporating a broader spectrum of functional and environmental diversity into training datasets.

Exploiting high quality predictions can significantly increase the body of knowledge in databases

For the predictions of the different models to augment the phenotypic data in databases such as BacDive, the quality of the predictions needs to be evaluated. Accuracy is an often-employed metric for evaluating the performance of models14,15,37. However, accuracy is not a good choice as soon as the input data are imbalanced, which is often the case for biological datasets. To evaluate this further, we also included a dataset that would otherwise be inappropriate for the application of machine learning because of the high imbalance in the training dataset. The ACIDO model should predict whether a strain can grow at a pH below 5 or not. As mentioned, the particular input dataset was highly imbalanced with 2483 strains being in the negative but only 45 strains being in the positive class. Most of the strains were indeed correctly predicted as negative and the ACIDO model had an accuracy of 97.3%, falsely suggesting a very good performance. However, the model had a precision of only 13% and a recall of 75%, emphasizing that accuracy is inappropriate for this kind of data. Therefore, we evaluated a number of established metrics (see Materials and Methods) for all eight different machine learning models developed in the present study to assess the quality of their predictions (Table 4, Supplementary Fig. S3A).
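The effect can be reproduced with a small worked example. The confusion-matrix counts below are hypothetical: they were chosen only so that the resulting metrics land close to the values quoted for the ACIDO model and are not the counts from the study.

```python
def accuracy(tp, fp, fn, tn):
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + fp + fn + tn)


def precision(tp, fp):
    """Fraction of positive predictions that are truly positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of truly positive samples that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical test set: 4 positives among 778 strains.
tp, fp, fn, tn = 3, 20, 1, 754

model_accuracy = accuracy(tp, fp, fn, tn)
model_precision = precision(tp, fp)
model_recall = recall(tp, fn)

# Trivial baseline that predicts "negative" for every strain.
baseline_accuracy = accuracy(0, 0, tp + fn, tn + fp)

print(f"accuracy  {model_accuracy:.3f}")
print(f"precision {model_precision:.3f}")
print(f"recall    {model_recall:.3f}")
print(f"all-negative baseline accuracy {baseline_accuracy:.3f}")
```

Under these assumed counts, a classifier that never predicts the positive class would reach an even higher accuracy than the model, which is why accuracy alone says little about a strongly imbalanced trait.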

Besides the ANAEROBE, AEROBE, THERMO, MOTILE_2+, and ACIDO models, we also built three additional models for which sufficiently large datasets are available through the BacDive database (Fig. 4). The model GRAMPOS was used to predict Gram-positive bacteria, SPORES to predict the capability of a strain to form spores and PSYCHRO was established to predict psychrophily (i.e., a growth optimum below 20 °C).

As stated, accuracy is a poor choice to evaluate the performance of models as soon as data are unbalanced, which is demonstrated by the fact that all models have an accuracy of more than 90% (Table 4). Although ROC curves are a good means to visualize the performance, the AUC shares a similar weakness with accuracy. The area under the precision/recall curve (AUPR) has proven to be a better summary for unbalanced datasets40. Precision, recall, specificity, and NPV are metrics that summarize parts of the confusion matrix and cover different aspects of the quality of a model. These metrics can be relevant to assess specific aspects, e.g. if false positives must be avoided, a high precision is needed, and the recall is less important. However, none of those metrics can reflect the model quality by itself, e.g. the PSYCHRO model has a high specificity of 97.7%, but a drastically low precision of 6.2%. Thus, more complex scores that take several aspects of the confusion matrix into account were evaluated. Although the normMCC is the only metric that takes all four rates of the confusion matrix into account, we selected the F1 score because it is easier to interpret and to compare between models while being almost as sensitive to imbalanced data as the normMCC metric. However, it should be mentioned that the true negative samples are not considered for the calculation of F1 scores, which is shown by the AEROBE model that had a higher F1 score than normMCC, since the latter also takes the negative predictive value (NPV) into account. A cutoff of 0.8 was applied to the F1 score of a model; thus, the highly imbalanced and underperforming models PSYCHRO and ACIDO were not used to generate predictions from prokaryotic genomes and integrate them into the BacDive database.
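The difference between the F1 score and the normMCC can be made concrete with a short sketch. It assumes that normMCC is the Matthews correlation coefficient rescaled from [-1, 1] to [0, 1] as (MCC + 1) / 2, which is a common convention; the definition actually used is given in Materials and Methods. The function names and the confusion-matrix counts are hypothetical.

```python
import math


def f1_from_counts(tp, fp, fn):
    """F1 score from confusion-matrix counts; true negatives are not used."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient, using all four counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


def norm_mcc(tp, fp, fn, tn):
    """Assumed normalization: rescale MCC from [-1, 1] to [0, 1]."""
    return (mcc(tp, fp, fn, tn) + 1) / 2


# Hypothetical counts for a strongly imbalanced trait.
tp, fp, fn, tn = 3, 20, 1, 754
f1 = f1_from_counts(tp, fp, fn)
nmcc = norm_mcc(tp, fp, fn, tn)

# Changing only the number of true negatives leaves F1 untouched
# but shifts the normMCC.
nmcc_fewer_tn = norm_mcc(tp, fp, fn, 75)

F1_CUTOFF = 0.8  # cutoff applied to the F1 score in the text
print(f"F1 {f1:.3f}, normMCC {nmcc:.3f}, normMCC with fewer TN {nmcc_fewer_tn:.3f}")
print("model retained:", f1 >= F1_CUTOFF)
```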

We then applied the six selected models to all 15,938 genomes in the dataset. This generated a new dataset with 95,628 data points. Of these, 45,232 overlapped with experimental data already present in the database and can thus be used to check for possible errors in the experimental results. The remaining 50,396 data points constitute completely new information and thus complement the existing 1,247,971 manually curated phenotypic data points in the BacDive database. For the selected traits, this represents an increase in data availability of more than 160%. Notably, more than 2000 strains previously had no data point at all for the predicted phenotypic traits and were thus significantly enriched in strain-associated information (Supplementary Fig. S4). In summary, 53% (50,396) of the predicted data were newly generated information, 45% (42,624) of the predictions concerned data already manually curated in BacDive and agreed with them, and less than 3% (2608) of all predicted data contradicted the existing manually curated information (Supplementary Fig. S4B). In only 113 cases did predictions contradict the existing BacDive data with over 90% confidence. In 27 cases, the predictions identified actual errors in the BacDive annotation, allowing for corrections. Another 32 cases related to oxygen tolerance predictions for which the literature listed both anaerobic and aerobic growth, so that predictions and original BacDive entries differed but were both correct.
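The bookkeeping behind these numbers can be retraced in a few lines of Python. All counts are taken from the text above; the variable names are illustrative only, and the snippet merely recomputes the totals and shares rather than reproducing any part of the actual pipeline.

```python
# Counts as reported in the text.
n_models = 6
n_genomes = 15_938
overlapping = 45_232   # predictions with an existing curated value
agreeing = 42_624      # overlapping predictions that match the curated value

total_predictions = n_models * n_genomes
new_predictions = total_predictions - overlapping
contradicting = overlapping - agreeing

# Shares relative to all predicted data points.
share_new = new_predictions / total_predictions
share_agreeing = agreeing / total_predictions
share_contradicting = contradicting / total_predictions

# Agreement relative to the overlapping data points only.
agreement_within_overlap = agreeing / overlapping

print(f"total predictions:        {total_predictions:,}")
print(f"new data points:          {new_predictions:,} ({share_new:.1%})")
print(f"agreeing with curation:   {agreeing:,} ({share_agreeing:.1%})")
print(f"contradicting curation:   {contradicting:,} ({share_contradicting:.1%})")
print(f"agreement within overlap: {agreement_within_overlap:.1%}")
```

Note that the share of contradictions depends on the denominator: it is below 3% relative to all predictions, whereas agreement relative to the overlapping data points alone is somewhat lower than the complementary value would suggest.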

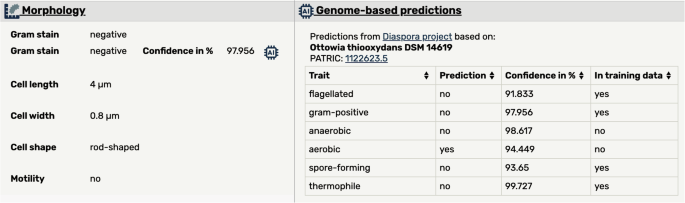

Because of the high quality of the predictions obtained with the six established machine learning models, we included the predicted data alongside the manually curated data in the BacDive web interface (Fig. 7, left). Additionally, we show an overview of all predicted data for the organism, together with the genome from which each prediction was derived (Fig. 7, right).

Left: Predicted data are integrated alongside manually annotated data. The displayed confidence value and an AI icon indicate that the data were derived computationally. Right: The table gives an overview of the predictions currently available for a strain, as well as the genome from which each prediction was derived.

Perspectives and limitations

The results of our analysis highlight the importance of the quantity and quality of balanced training data for generating trustworthy predictions of phenotypic traits of prokaryotes from their genome sequences. Of similar importance is the consideration of available biological knowledge. As demonstrated in this work, generalized models easily fail to predict the actual phenotype if well-known differences in the underlying biology are not taken into account during the design of the model. Our approach makes it possible to explicitly determine the key underlying genetic features and hence offers the opportunity for a deeper biological interpretation of the results. Not all commonly used metrics are equally suitable for evaluating machine learning models, though. Metrics that integrate several quality aspects, in particular normMCC or the F1 score, should preferably be applied to assess the quality of AI predictions. If these conditions are met, high-performing machine learning models generate valuable novel information that can be used to enrich existing databases. In our exemplary study, six models yielded 50,396 completely new data points for 15,938 strains and made it possible to challenge 45,232 existing data points, yielding consensus in more than 94% of those cases (contradictions amounted to less than 3% of all predictions). Thus, high-quality machine learning models that take biological insights into account clearly provide the means to significantly improve the availability of data in open databases for subsequent functional studies.

To enhance the predictive performance and biological relevance of machine learning approaches, a systematic expansion of the presently available phenotypic and genomic datasets remains crucial. Our analyses highlight that the prediction of phenotypic traits by machine learning could be improved significantly if the quality and quantity of genomic data were increased (Fig. 1). Targeted sequencing of strains from microbial culture collections that have already been phenotypically characterized would be particularly promising.

In parallel, future research should focus on the experimental validation of computational predictions, for example through laboratory assays on genomically characterized but phenotypically unannotated strains. Ultimately, applying these models to metagenome-assembled genomes (MAGs) would extend predictions to the growth conditions of as yet uncultivated microbes. This would also require deducing the composition of the growth medium needed for cultivation. In the context of the MediaDive database41, we are currently exploring complementary approaches aimed at predicting the nutrient and chemical requirements of uncultured microorganisms. Such integrated strategies would considerably strengthen both the reliability and applicability of predictive phenotype modeling in microbiology.