Preprocessing and institutional pairwise analysis of clinical free text

The final dataset contained 1,607,393 procedures from 44 institutions, covering 48 distinct anesthesiology CPT codes (Figure S2). Of the original 3,566,343 procedures, 1,526,911 with CPT codes not shared among all institutions and 432,039 with missing data were removed. The majority of procedures were performed on women (866,284; 53.9%), and the mean [SD] patient age was 49.3 [22.4] years. By the American Society of Anesthesiologists Physical Status Classification (ASA), a clinically accepted measure of patient illness severity, most procedures were ASA 2 (693,590; 43.1%) or ASA 3 (629,978; 39.2%). Pediatric patients accounted for 190,353 procedures (11.8%) and ASA emergency status for 88,432 (5.5%). The three most common anesthesia CPT codes were 00840 (141,538; 8.8%), 00790 (126,691; 7.9%), and 00400 (119,026; 7.4%).
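As a consistency check on the cohort counts, 3,566,343 − 1,526,911 − 432,039 = 1,607,393. The sketch below recomputes the final cohort size and the reported percentages from the counts in this paragraph alone; the variable and function names are illustrative and are not taken from the study's code.

```python
# Counts as reported in the text; no patient-level data is used here.
TOTAL_BEFORE_EXCLUSION = 3_566_343
EXCLUDED_UNSHARED_CPT = 1_526_911  # CPT codes not shared by all institutions
EXCLUDED_MISSING = 432_039         # procedures with missing data

final_n = TOTAL_BEFORE_EXCLUSION - EXCLUDED_UNSHARED_CPT - EXCLUDED_MISSING

subgroups = {
    "female": 866_284,
    "ASA 2": 693_590,
    "ASA 3": 629_978,
    "pediatric": 190_353,
    "ASA emergency": 88_432,
    "CPT 00840": 141_538,
    "CPT 00790": 126_691,
    "CPT 00400": 119_026,
}


def percent_of_cohort(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


if __name__ == "__main__":
    print(f"final cohort: {final_n:,}")
    for name, count in subgroups.items():
        print(f"{name}: {count:,} ({percent_of_cohort(count, final_n)}%)")
```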

Table 1 shows examples of procedure text. Text was preprocessed using one of the three techniques described in the Methods. Maximal preprocessing relied on manual expansion of acronyms and correction of misspelled words by two independent physicians. Of the 4,902 manually modified strings, 120 were annotated by both physicians, yielding a simple kappa inter-rater reliability of 0.9238 (95% CI 0.8643–0.9834). The procedure text contained 216,763 unique terms across all institutions. Minimal preprocessing reduced the vocabulary to 64,249 terms (30.0% of the original), and Cspell and maximal preprocessing reduced it further to 37,371 (17.2%) and 37,098 (17.1%), respectively. Without preprocessing, the mean vocabulary overlap between pairs of institutions was 23.5% [18.3% SD; 0.1–87.7% min–max], and most pairings (89.1%, 1,686/1,892) showed less than 50% overlap (Figure S3). Mean overlap increased to 46.3% [18.2% SD; 9.7–92.1%] with minimal preprocessing, 52.2% [17.9%; 12.5–92.7%] with Cspell, and 52.4% [17.9%; 12.6–92.6%] with maximal preprocessing (Figure 1). Jaccard similarity between institutions increased similarly from minimal to Cspell to maximal preprocessing (Figure S4). Cspell flagged approximately 10% of words as misspellings, and 9% of procedures contained at least one misspelled word.
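The text reports a "simple kappa" for the 120 doubly annotated strings. Assuming this is Cohen's kappa for two raters assigning categorical labels, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the chance agreement implied by each rater's label frequencies. A minimal sketch follows; the label values in the example are hypothetical, and the confidence interval is not reproduced.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items: (p_o - p_e) / (1 - p_e)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # observed agreement: fraction of items given the same label by both raters
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # chance agreement: product of the two raters' marginal label frequencies
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # hypothetical labels for how each physician handled four strings
    a = ["expand", "expand", "correct", "keep"]
    b = ["expand", "correct", "correct", "keep"]
    print(round(cohens_kappa(a, b), 4))
```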

Composite histograms of pairwise vocabulary overlap under each text preprocessing method. For each method, the histogram shows 1,892 pairwise comparisons (the vocabulary of institution a in institution b, where a ≠ b). Each successive preprocessing technique reduces vocabulary size and increases overlap between institutions.
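A minimal sketch of the pairwise comparison in this caption, assuming that the overlap of institution a in institution b is |V_a ∩ V_b| / |V_a| (so it is directional, which is why 44 institutions give 44 × 43 = 1,892 ordered pairs) and that Jaccard similarity is |V_a ∩ V_b| / |V_a ∪ V_b|. The whitespace tokenizer is a hypothetical stand-in for the preprocessing described in the Methods.

```python
from itertools import permutations


def vocabulary(texts):
    """Hypothetical minimal preprocessing: lowercase, split on whitespace, keep unique terms."""
    return {token for text in texts for token in text.lower().split()}


def overlap(vocab_a, vocab_b):
    """Directional overlap: fraction of institution a's terms that also occur at institution b."""
    return len(vocab_a & vocab_b) / len(vocab_a) if vocab_a else 0.0


def jaccard(vocab_a, vocab_b):
    """Symmetric Jaccard similarity: shared terms over all terms used by either institution."""
    union = vocab_a | vocab_b
    return len(vocab_a & vocab_b) / len(union) if union else 0.0


def pairwise_overlap(corpora):
    """Overlap for every ordered pair (a, b) of institutions with a != b."""
    vocabs = {site: vocabulary(texts) for site, texts in corpora.items()}
    return {(a, b): overlap(vocabs[a], vocabs[b]) for a, b in permutations(vocabs, 2)}


if __name__ == "__main__":
    corpora = {"A": ["lap chole", "ORIF wrist"], "B": ["laparoscopic chole"], "C": ["cataract"]}
    for pair, value in sorted(pairwise_overlap(corpora).items()):
        print(pair, round(value, 2))
```

Because overlap is normalized by the first institution's vocabulary, a small institution can overlap heavily with a large one while the reverse overlap stays low; Jaccard similarity removes that asymmetry.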

Institutional models were trained with each of the three preprocessing methods and evaluated across all institutions, yielding 132 models and 5,676 pairwise evaluations. With minimal preprocessing, mean self-institution accuracy was 92.5% [2.8% SD] and mean F1 score was 0.923 [0.029] (Table 2). Moving from self to non-self data reduced accuracy by a mean of 22.4% [7.0%] and F1 score by 0.223 [0.081]. Preprocessing improved performance only marginally (minimal to maximal preprocessing: +0.51% [2.23%] accuracy; +0.004 [0.020] F1 score). Vocabulary overlap correlated weakly with model accuracy and F1 score (mean R² 0.16; Figure 2). Jaccard similarity was also only weakly correlated with model accuracy, and more weakly than vocabulary overlap (mean R² 0.08; Figure S5).
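The model and evaluation counts follow from the design: 44 institutions × 3 preprocessing methods = 132 models, and testing each model on the 43 other institutions gives 132 × 43 = 5,676 non-self evaluations. For R², the sketch below assumes a simple least-squares fit of a performance metric (accuracy or F1) on a similarity metric, for which R² equals the squared Pearson correlation; how the individual fits were averaged is not reproduced here.

```python
import math

N_INSTITUTIONS = 44
N_PREPROCESSING_METHODS = 3

# one model per institution and preprocessing method
n_models = N_INSTITUTIONS * N_PREPROCESSING_METHODS
# each model tested on every institution other than its own
n_non_self_evaluations = n_models * (N_INSTITUTIONS - 1)


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def r_squared(xs, ys):
    """R² of a least-squares line of ys on xs; for simple linear regression this equals r**2."""
    return pearson_r(xs, ys) ** 2


if __name__ == "__main__":
    print(n_models, n_non_self_evaluations)
    # hypothetical overlap values and F1 scores for four institution pairs
    print(round(r_squared([0.2, 0.4, 0.5, 0.7], [0.61, 0.70, 0.66, 0.78]), 3))
```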

F1 score versus vocabulary overlap and KLD. Each point represents one pair of non-self institutions. Blue points plot F1 score against overlap; red points plot F1 score against KLD. Minimal data preprocessing was used for modeling.

Models from combined institutional data

The “80:20” combined-data model produced a mean [SD] accuracy of 87.7% [4.0%] and F1 score of 0.879 [0.386]. Compared with individual models tested on self-data, the 80:20 model performed worse for every institution, with 4.88% [2.43%] lower accuracy and 0.045 [0.020] lower F1 score. On non-self data, it performed better in all instances, with 17.1% [8.7%] higher accuracy and 0.182 [0.073] higher F1 score. The 44 “hold-out” models yielded a mean accuracy of 84.6% [5.1%] and F1 score of 0.848 [0.491]. The hold-out models were worse in all comparisons with individual models tested on self-data (8.01% [3.72%] lower accuracy; 0.075 [0.034] lower F1 score). On non-self data, the hold-out models improved performance in all instances, with mean gains of 17.1% [8.7%] in accuracy and 0.167 [0.073] in F1 score.
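The two pooled-data designs can be sketched as data splits, assuming that the 80:20 model pools a random 80% of every institution's procedures for training and keeps the remaining 20% of each site for testing, and that each of the 44 hold-out models is trained on all other institutions and tested on the held-out one. The function names and record format are hypothetical.

```python
import random


def split_80_20(records_by_site, train_fraction=0.8, seed=0):
    """Pool a random train_fraction of every site's records; keep the rest of each site for testing."""
    rng = random.Random(seed)
    train, test_by_site = [], {}
    for site, records in records_by_site.items():
        shuffled = list(records)
        rng.shuffle(shuffled)
        cut = int(train_fraction * len(shuffled))
        train.extend(shuffled[:cut])
        test_by_site[site] = shuffled[cut:]
    return train, test_by_site


def leave_one_site_out(records_by_site):
    """Yield (held_out_site, train_records, test_records), training on every other site."""
    for held_out in records_by_site:
        train = [r for site, recs in records_by_site.items() if site != held_out for r in recs]
        yield held_out, train, list(records_by_site[held_out])


if __name__ == "__main__":
    data = {"A": list(range(10)), "B": list(range(10, 20)), "C": list(range(20, 25))}
    train, test = split_80_20(data)
    print(len(train), {site: len(recs) for site, recs in test.items()})
    for site, tr, te in leave_one_site_out(data):
        print(site, len(tr), len(te))
```

Keeping a per-site test set in the 80:20 design is what allows the per-institution comparison with self-data models described above.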

Kullback–Leibler divergence

KLD metrics were computed for individual words, CPT codes, and a composite (KLD_WORD, KLD_CPT, and KLD_COMPOSITE). All KLD metrics correlated more strongly with accuracy and F1 score than vocabulary overlap did (Figure 2, Figure S6), with composite KLD reaching an R² of 0.41. As KLD increased (that is, as data distributions became less similar), model performance declined. Vocabulary overlap alone yielded R² values of 0.15–0.16 (Figure S6), whereas KLD_WORD yielded an R² of 0.23 and KLD_CPT an R² of 0.33. The mean Pearson product-moment correlation for composite KLD was -0.8127 (min -0.882, max -0.644), with all institutional pairs showing strong negative correlations. By contrast, pairwise vocabulary overlap showed a weaker negative correlation of -0.4423 (min -0.674, max 0.0091).
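A minimal sketch of a KL divergence between two institutions' count distributions: token counts for a KLD_WORD-style metric, or CPT code counts for a KLD_CPT-style metric. The add-alpha smoothing over the union of observed keys, the natural logarithm, and the direction KL(P ‖ Q) are assumptions, chosen so the divergence stays finite when one institution uses a term the other never does. How the metrics are combined into KLD_COMPOSITE is defined in the Methods and is not reproduced here.

```python
import math
from collections import Counter


def kl_divergence(p_counts, q_counts, alpha=1.0):
    """KL(P || Q) in nats between two count tables, with add-alpha smoothing over the union of keys."""
    if alpha <= 0:
        raise ValueError("alpha must be positive so that every probability is non-zero")
    support = set(p_counts) | set(q_counts)
    p_total = sum(p_counts.values()) + alpha * len(support)
    q_total = sum(q_counts.values()) + alpha * len(support)
    total = 0.0
    for key in support:
        p = (p_counts.get(key, 0) + alpha) / p_total
        q = (q_counts.get(key, 0) + alpha) / q_total
        total += p * math.log(p / q)
    return total


def token_counts(texts):
    """Hypothetical tokenizer: lowercase and split on whitespace."""
    return Counter(token for text in texts for token in text.lower().split())


if __name__ == "__main__":
    site_a = token_counts(["lap chole", "lap appy"])
    site_b = token_counts(["laparoscopic cholecystectomy", "lap chole"])
    # KLD is asymmetric, so the two directions generally differ
    print(kl_divergence(site_a, site_b), kl_divergence(site_b, site_a))
    cpt_a = Counter({"00790": 40, "00840": 60})
    cpt_b = Counter({"00790": 55, "00840": 45})
    print(kl_divergence(cpt_a, cpt_b))
```

Larger values mean the two institutions' distributions are less alike, which matches the negative correlation with model performance reported above.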

Institutional clustering

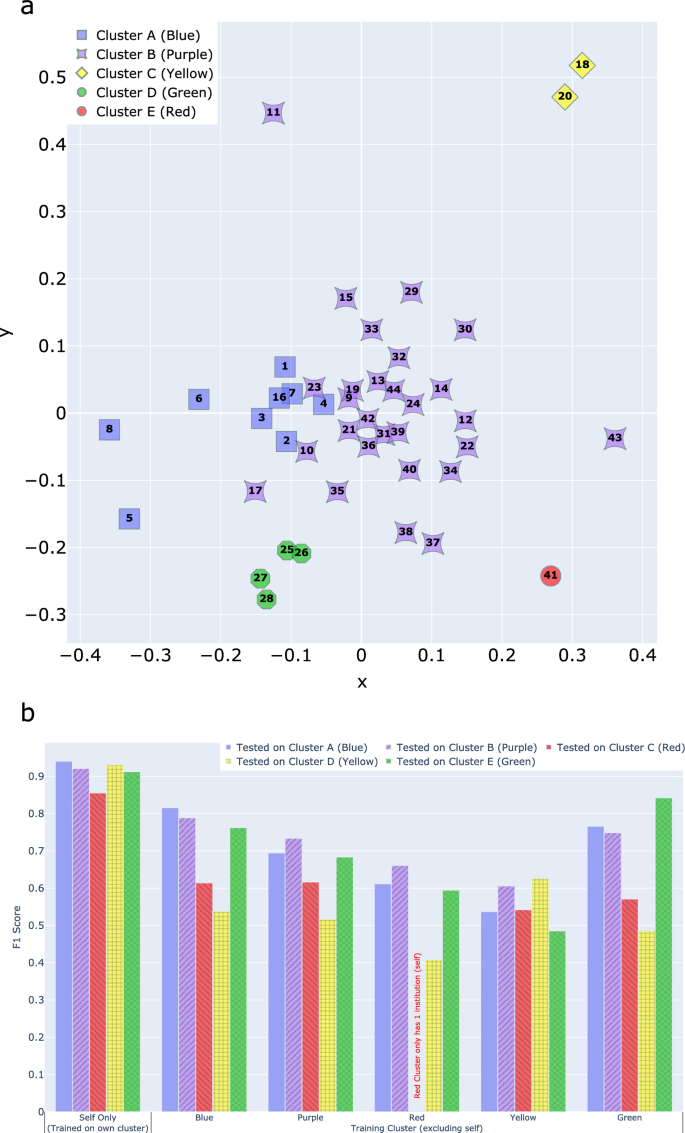

K-medoid clustering on composite KLD produced five clusters: blue (nine institutions), purple (28), red (one), yellow (two), and green (four) (Figure 3a). When tested on non-self institutions, models performed better on intra-cluster than on inter-cluster data (accuracy [SD]): blue (81.6% [6.8%] vs. 77.0% [6.6%]), purple (73.4% [8.1%] vs. 66.9% [9.5%]), green (83.9% [1.6%] vs. 74.5% [6.8%]), and yellow (61.0% [8.8%] vs. 56.8% [6.6%]). Intra-cluster analysis was not possible for red (a single institution), which showed an inter-cluster accuracy of 62.8% [5.9%]. F1 scores followed the same pattern: blue (0.816 [0.072] vs. 0.767 [0.077]), purple (0.734 [0.092] vs. 0.664 [0.105]), green (0.842 [0.015] vs. 0.735 [0.084]), yellow (0.627 [0.092] vs. 0.578 [0.081]), and red (n/a vs. 0.633 [0.105]) (Figure 3b).
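A sketch of clustering institutions from a pairwise composite-KLD matrix and then comparing intra- versus inter-cluster performance. Because KLD is asymmetric, the matrix is symmetrized by averaging it with its transpose, which is an assumption. The clustering is a simple alternating (Voronoi-iteration) k-medoids with a greedy PAM-style BUILD start, not necessarily the study's implementation; grouping scores by the training institution's cluster is also an assumption.

```python
import math


def symmetrize(dist):
    """Average an asymmetric divergence matrix with its transpose (assumed symmetrization)."""
    n = len(dist)
    return [[(dist[i][j] + dist[j][i]) / 2 for j in range(n)] for i in range(n)]


def _cost(dist, medoids):
    """Total distance from every point to its nearest medoid."""
    return sum(min(dist[i][m] for m in medoids) for i in range(len(dist)))


def _assign(dist, medoids):
    """Label each point with its nearest medoid; medoids always label themselves."""
    return [i if i in medoids else min(medoids, key=lambda m: dist[i][m])
            for i in range(len(dist))]


def k_medoids(dist, k, max_iter=100):
    """Greedy BUILD start, then alternate assignment and medoid updates until stable."""
    n = len(dist)
    medoids = []
    for _ in range(k):
        candidates = [c for c in range(n) if c not in medoids]
        medoids.append(min(candidates, key=lambda c: _cost(dist, medoids + [c])))
    medoids.sort()
    for _ in range(max_iter):
        labels = _assign(dist, medoids)
        updated = []
        for m in medoids:
            members = [i for i in range(n) if labels[i] == m]
            # new medoid: the member with the smallest total distance to its cluster
            updated.append(min(members, key=lambda c: sum(dist[c][j] for j in members)))
        updated.sort()
        if updated == medoids:
            break
        medoids = updated
    return medoids, _assign(dist, medoids)


def _mean(values):
    return sum(values) / len(values) if values else math.nan


def intra_inter_by_cluster(scores, labels):
    """Per cluster: mean non-self score on same-cluster vs. other-cluster test sites.

    scores maps (train_site, test_site) -> accuracy or F1; a single-site
    cluster has no intra-cluster pairs and gets NaN (reported as n/a).
    """
    result = {}
    for c in set(labels):
        intra = [s for (a, b), s in scores.items()
                 if a != b and labels[a] == c and labels[b] == c]
        inter = [s for (a, b), s in scores.items()
                 if a != b and labels[a] == c and labels[b] != c]
        result[c] = (_mean(intra), _mean(inter))
    return result
```

For the 44 institutions here, `k_medoids(symmetrize(kld_matrix), 5)` would be the analogous call, where `kld_matrix` is a hypothetical 44 × 44 list of lists of composite KLD values.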

(a) Two-dimensional Cartesian representation of the clustering, showing five non-overlapping, unbalanced clusters derived from K-medoid clustering on composite KLD. (b) F1 scores when testing across cluster groups; self-institution testing is excluded. Scores are reported as means. Minimal data preprocessing was used for modeling.

The single red-cluster institution generalized poorly (mean accuracy 61.4% [10.9%] and F1 score 0.608 [0.110], compared with 72.2% [7.2%] and 0.720 [0.087] for all other institutional combinations). Similarly, institutions 11, 37, 38, and 43 performed relatively poorly compared with their purple-cluster peers (intra-cluster accuracy 62.9% [9.6%] and F1 score 0.594 [0.118], compared with 75.2% [6.3%] and 0.757 [0.062]). These outliers also had higher composite KLD values relative to the rest of their cluster (2.2 [0.9] vs. 1.2 [0.5]). Comparing institutional demographics (Table S1), 93.1% of institution 11's procedures were pediatric, compared with 11.8% across the full dataset. The outliers also had a larger proportion of ASA 1 patients and a lower proportion of major surgical procedures.