Find out what the recently released Docker Model Runner is and how you can use it to deploy AI applications.

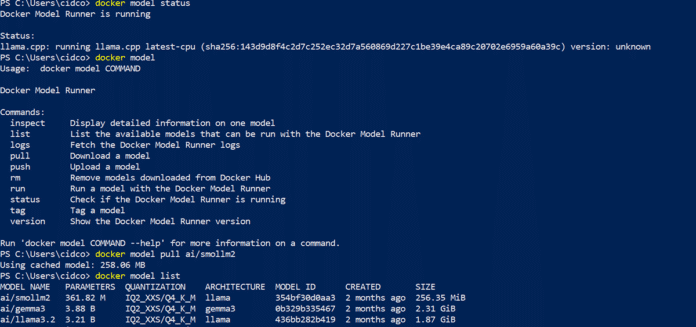

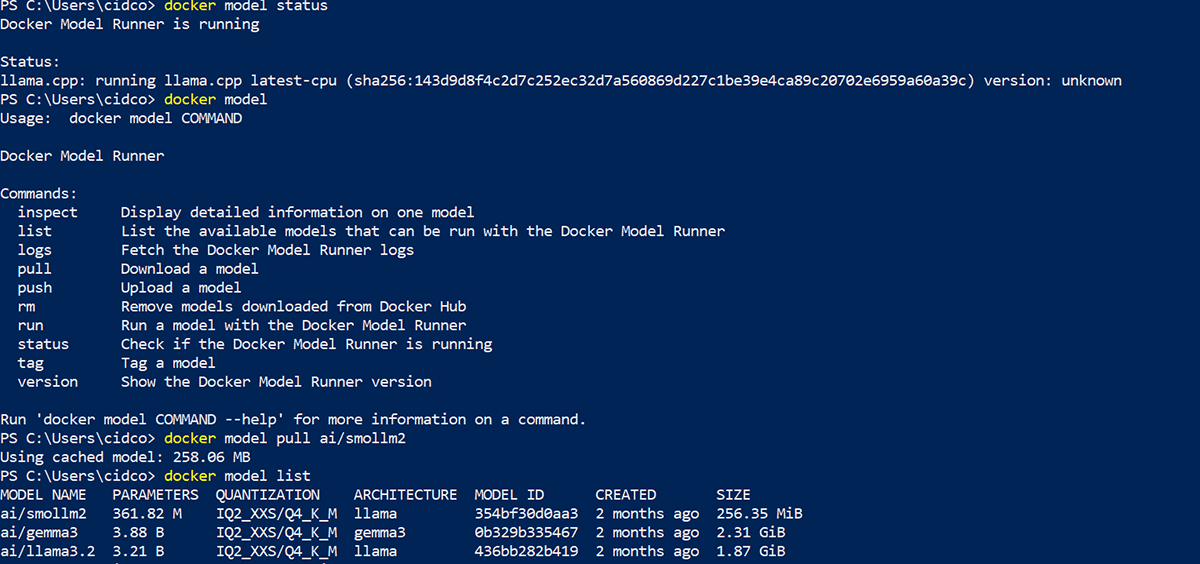

Docker has released Docker Model Runner so that developers can run and test LLM-based applications locally. Models are pulled and run in Docker Desktop (version 4.40 or later) on localhost. This beta feature must be enabled in the Docker Desktop settings. Various models, such as ai/smollm2, ai/deepseek-r1-distill-llama, and ai/llama3.2, are available from Docker's model registry (see https://docs.docker.com/ai/model-runner/), and you can pull whichever of them you need to your local host. After enabling Docker Model Runner, you can use the commands shown in Figure 1 to work with Docker models.
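The docker model CLI includes subcommands such as pull, list, run and rm. Here is a minimal sketch of driving them from a Python script through subprocess. It assumes Docker Desktop 4.40 or later with Model Runner enabled and docker on your PATH. model_cmd and run_model_cmd are hypothetical helper names, not part of any Docker SDK.

```python
import subprocess

# Hypothetical helpers wrapping the `docker model` CLI.
# Assumes Docker Desktop 4.40+ with Model Runner enabled and `docker` on PATH.


def model_cmd(subcommand: str, *args: str) -> list[str]:
    """Return the argv list for `docker model <subcommand> [args...]`."""
    return ["docker", "model", subcommand, *args]


def run_model_cmd(subcommand: str, *args: str) -> str:
    """Run a `docker model` command and return its standard output."""
    result = subprocess.run(
        model_cmd(subcommand, *args),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the command fails
    )
    return result.stdout


# Example usage (requires a running Docker Desktop with Model Runner enabled):
#   print(run_model_cmd("pull", "ai/smollm2"))  # download the model locally
#   print(run_model_cmd("list"))                # show locally available models
#   print(run_model_cmd("run", "ai/smollm2", "Say hello in one sentence."))
```

Building the argument list separately from running it keeps the command easy to inspect and log before anything is executed.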

Let's use a Docker model with an AI agent. The agent is a Python application that uses Model Runner as the backend for all interactive chats. The following code snippet calls Docker Model Runner. The request uses ai/llama3.2:latest as an example, and you can swap in ai/smollm2 or any other model you have pulled.

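```python
import os

import requests

# Assumed default: the hostname Docker Desktop exposes to containers for Model Runner.
# From the host (with TCP access enabled) this might be http://localhost:12434 instead.
MODEL_RUNNER_URL = os.getenv("MODEL_RUNNER_URL", "http://model-runner.docker.internal")

prompt = "What is Docker Model Runner?"  # placeholder; in the app this comes from the chat input

response = requests.post(
    # Alternative: address the llama.cpp engine explicitly
    # f"{MODEL_RUNNER_URL}/engines/llama.cpp/v1/chat/completions",
    f"{MODEL_RUNNER_URL}/engines/v1/chat/completions",
    json={
        "model": "ai/llama3.2:latest",  # Example model, can be changed
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 100,
    },
    timeout=60,
)
response.raise_for_status()  # fail loudly on HTTP errors
reply = response.json()["choices"][0]["message"]["content"]  # assistant's answer
```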
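The snippet above sends a single prompt. An interactive chat also needs the earlier turns of the conversation. Here is a minimal sketch in which a hypothetical ChatSession class keeps the history in a list and resends it on every request. It assumes the OpenAI-compatible response shape (choices[0].message.content) and the same assumed MODEL_RUNNER_URL default as above. It uses only the standard library, and the transport is injectable so the logic can be exercised without a running Model Runner.

```python
import json
import os
import urllib.request
from typing import Callable

# Assumed default base URL (the hostname Docker Desktop exposes to containers).
MODEL_RUNNER_URL = os.getenv("MODEL_RUNNER_URL", "http://model-runner.docker.internal")

Transport = Callable[[str, dict], dict]


def http_transport(url: str, payload: dict) -> dict:
    """POST `payload` as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


class ChatSession:
    """Hypothetical helper that keeps multi-turn chat history for Model Runner."""

    def __init__(self, model: str = "ai/smollm2", max_tokens: int = 100,
                 transport: Transport = http_transport) -> None:
        self.model = model
        self.max_tokens = max_tokens
        self.transport = transport
        self.messages: list[dict] = []

    def ask(self, prompt: str) -> str:
        """Send `prompt` together with all previous turns and return the reply."""
        self.messages.append({"role": "user", "content": prompt})
        payload = {
            "model": self.model,
            "messages": self.messages,
            "max_tokens": self.max_tokens,
        }
        url = f"{MODEL_RUNNER_URL}/engines/v1/chat/completions"
        data = self.transport(url, payload)
        reply = data["choices"][0]["message"]["content"]
        # Record the assistant turn so the next request includes it.
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

For example, ChatSession(model="ai/smollm2").ask("What is Docker?") sends the first turn, and a second call to ask includes the previous exchange. Resending the full history is the simplest design. Because the prompt grows with every turn, a long-running agent would eventually need to trim older messages.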

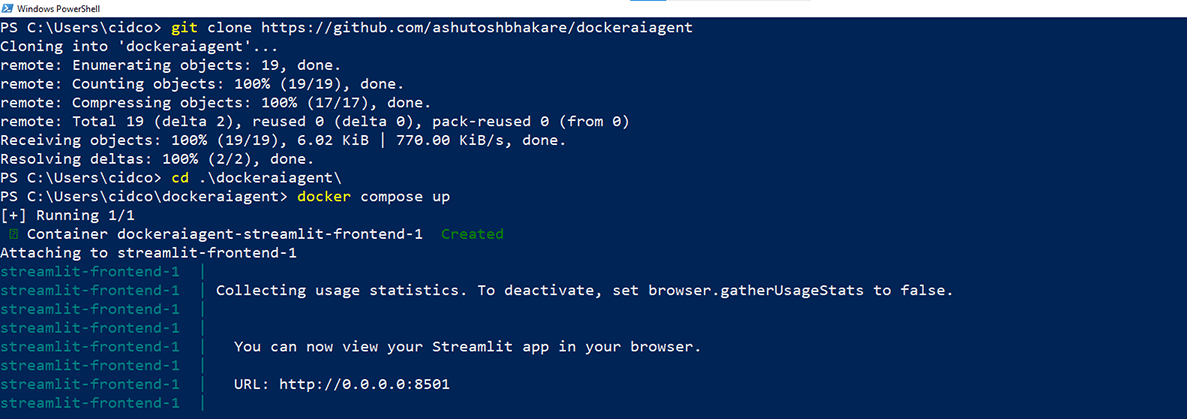

To deploy this application, clone the repository at https://github.com/ashutoshbhakare/dockeraiagent and run the commands shown in Figure 2.

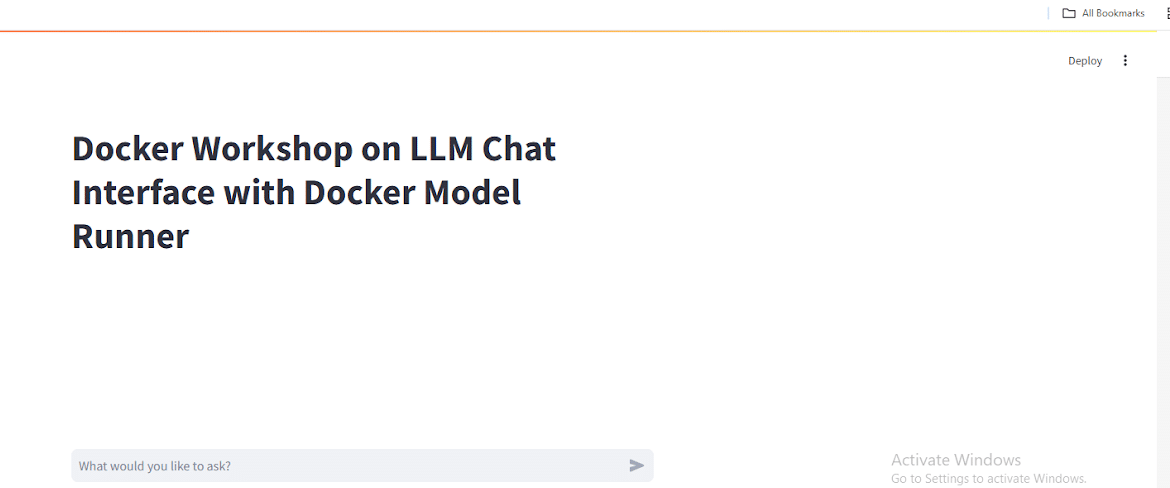

This builds the image and runs the container on port 8501. You can then open the application at http://localhost:8501, as shown in Figure 3.
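The container may take a few seconds to start, so a script that opens the app can first wait for the port to answer. Here is a minimal sketch using only the Python standard library. wait_for_http is a hypothetical helper, and http://localhost:8501 is assumed from the port mapping described above.

```python
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll `url` until it returns a non-error HTTP response or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # urlopen raises HTTPError (an OSError) for 4xx/5xx responses.
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except (OSError, http.client.HTTPException):
            pass  # connection refused, timed out, or server error: not ready yet
        time.sleep(interval)
    return False
```

For example, wait_for_http("http://localhost:8501") returns True once the application responds, or False after 30 seconds.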

Advantages of Docker Model Runner

Run everything locally

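Models are pulled once and then run entirely on your machine, so you don't depend on a live connection to a hosted AI service. This reduces network latency and helps with security compliance, because prompts and data stay local.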

Direct access to the GPU

Docker Model Runner runs its inference engine, llama.cpp, directly on the host rather than inside a virtualized container, so it can use the host GPU for acceleration.

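Manage all AI models in one place

As a developer, you don't have to manage multiple models from different locations. Models are pulled from Docker's registry and served through the same local API, where the model field of each request selects which one to use.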

AI models can run on your local machine

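Docker Model Runner lets you interact with AI models directly on your local machine, either from the command line with docker model run or from your own applications through its local OpenAI-compatible API.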

Besides Docker Model Runner, Docker has also recently released Docker Ask Gordon and the Docker MCP Toolkit. For up-to-date information, visit docker.com.