The detection of buried objects has long been a critical aspect of both military operations and humanitarian efforts such as minefield clearance [1]. Detecting subsurface anomalies is a fundamental requirement, but it is not sufficient on its own to ensure operational success or safety. Equally important is the ability to classify detected objects accurately and to assess the threat they pose. This is particularly important for metallic components, which often indicate explosive devices or other hazardous materials [2]. Effective classification separates dangerous objects from harmless debris, which reduces false alarms and lets resources be allocated where they are needed. Accurate threat assessment also helps protect personnel, improves situational awareness, and increases the efficiency and reliability of minefield clearance and subsurface surveillance missions.

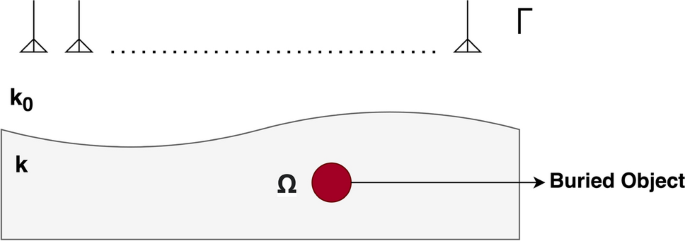

For buried objects, ground-penetrating radar (GPR) is the most commonly employed detection technique [3]. Its primary advantage is that it detects subsurface anomalies non-invasively, which makes it suitable for a variety of military and civilian applications. GPR produces graphical representations of the reflected electromagnetic signals, known as radargrams, whose interpretation typically requires expert knowledge. When data are collected over a grid and reconstructed into three-dimensional models, radargrams allow object shape, depth, and composition to be inferred. A notable limitation of GPR, however, is its reduced performance in high-conductivity soils, where signal attenuation can severely degrade data quality and interpretability [4].

Microwave systems are a valuable tool for extracting information about objects in inaccessible locations, owing to their ability to penetrate a range of non-metallic and dielectric materials [5]. These systems transmit electromagnetic waves in the microwave band; the waves interact with underground anomalies and return signals that can reveal an object's location, size, and material properties [6]. A major strength of microwave-based techniques is their high spatial resolution, which allows more accurate identification and localization than some conventional subsurface sensing methods. In addition, their non-contact operation improves safety and usability, particularly in dangerous or hard-to-access areas. Consequently, microwave-based systems have been widely adopted in both academic research and industrial applications [7–11].

Microwave systems for buried object detection nevertheless have limitations. Signal attenuation in soils with high moisture content or electrical conductivity reduces penetration depth and detection accuracy. Complex subsurface environments cause scattering and multipath effects that complicate the interpretation of returned signals. Finally, distinguishing between materials with similar dielectric properties remains a significant challenge and can lead to ambiguous identification of buried objects.
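The effect of moisture and conductivity on penetration can be made concrete with the standard plane-wave attenuation constant of a homogeneous, non-magnetic lossy medium, \(\alpha = \omega\sqrt{\mu\varepsilon/2}\,\big[\sqrt{1 + (\sigma/\omega\varepsilon)^2} - 1\big]^{1/2}\). The sketch below evaluates it for two soil parameter sets; the permittivity, conductivity, frequency, and depth values are hypothetical illustrations, not measurements from this study, and spreading and reflection losses are ignored.

```python
import math

EPS0 = 8.854187817e-12  # vacuum permittivity (F/m)
MU0 = 4e-7 * math.pi    # vacuum permeability (H/m)

def attenuation_np_per_m(freq_hz: float, eps_r: float, sigma: float, mu_r: float = 1.0) -> float:
    """Plane-wave attenuation constant alpha (Np/m) of a homogeneous lossy medium."""
    omega = 2 * math.pi * freq_hz
    eps = eps_r * EPS0
    mu = mu_r * MU0
    loss_tangent = sigma / (omega * eps)
    return omega * math.sqrt(mu * eps / 2) * math.sqrt(math.sqrt(1 + loss_tangent ** 2) - 1)

def two_way_loss_db(freq_hz: float, eps_r: float, sigma: float, depth_m: float) -> float:
    """Round-trip amplitude loss (dB) due to attenuation only; spreading/reflection ignored."""
    alpha = attenuation_np_per_m(freq_hz, eps_r, sigma)
    return 20 * math.log10(math.e) * alpha * 2 * depth_m  # 1 Np = 8.686 dB (amplitude)

# Hypothetical soils at 1 GHz, target 10 cm deep
for name, eps_r, sigma in [("dry sand", 4.0, 0.001), ("wet clay", 25.0, 0.1)]:
    print(f"{name}: {two_way_loss_db(1e9, eps_r, sigma, 0.10):.2f} dB round trip")
```

With these assumed values the round-trip loss is about 0.16 dB for the dry soil and about 6.5 dB for the wet, conductive soil, for the same 10 cm path.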

To address these challenges, we propose a data-driven artificial intelligence (AI) approach. Machine learning algorithms can improve the interpretation of complex microwave measurement data, increase detection accuracy, and reduce false alarm rates. Because the model learns intricate patterns and subtle variations in the electromagnetic signals directly from data, it can overcome limitations of conventional signal processing techniques and enable more reliable identification of buried objects under diverse and challenging subsurface conditions.

Among AI-driven detection and enhancement methods, Chiu et al. made a notable contribution to the electromagnetic inverse scattering problem for buried conductors using a deep convolutional neural network (CNN) [12]. Conventional iterative approaches to this problem require repeated Green's function evaluations and are therefore computationally intensive, sensitive to noise, and difficult to scale to real-time applications. In contrast, the CNN-based framework of Chiu et al. reconstructs conductor geometries directly from scattered electromagnetic fields, bypassing these computational bottlenecks while maintaining high reconstruction accuracy.

Numerical experiments showed that the method achieves root mean square errors generally below 18%, even for irregular and complex conductor shapes, and outperforms established architectures such as U-Net. Its ability to recover diverse geometries robustly suggests broader applicability in domains where electromagnetic imaging is essential, including nondestructive industrial testing, underground pipeline monitoring, biomedical imaging, and military detection. By integrating data-driven modeling with principles of electromagnetic theory, this work illustrates the potential of deep learning to overcome the limitations of traditional inverse scattering methods and provides a foundation for efficient, high-resolution subsurface imaging.
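An RMSE quoted as a percentage implies some normalization. The exact definition used in [12] is not restated here, so the sketch below assumes one common choice: the RMSE between the reconstructed and true images divided by the RMS of the true image, which equals \(\lVert \hat{x} - x \rVert_2 / \lVert x \rVert_2\). The disk-shaped conductor mask and the one-pixel shift are hypothetical test data.

```python
import numpy as np

def relative_rmse(reconstructed: np.ndarray, reference: np.ndarray) -> float:
    """RMSE between two images divided by the RMS of the reference (a fraction; x100 for %)."""
    rmse = np.sqrt(np.mean((reconstructed - reference) ** 2))
    reference_rms = np.sqrt(np.mean(reference ** 2))
    return float(rmse / reference_rms)

# Hypothetical example: disk-shaped conductor mask vs. a reconstruction shifted by one pixel
yy, xx = np.mgrid[:64, :64]
true_mask = ((xx - 32) ** 2 + (yy - 32) ** 2 <= 10 ** 2).astype(float)
recon = np.roll(true_mask, shift=1, axis=1)
print(f"relative RMSE: {100 * relative_rmse(recon, true_mask):.1f}%")
```

Because the reference RMS is in the denominator, the same absolute error counts for more on small or low-contrast targets, which is worth keeping in mind when comparing percentages across shapes.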

A complementary line of research aims to improve the speed and computational efficiency of buried object characterization by minimizing reliance on extensive preprocessing [13]. The authors proposed a time–frequency regression model (FRM) that analyzes raw GPR signals directly. Rather than relying on conventional intermediate steps such as background removal, filtering, or handcrafted feature extraction, the FRM uses data-driven surrogate modeling to estimate object parameters in a direct and computationally lightweight manner. This streamlined pipeline substantially accelerates interpretation, reducing detection latency and improving the responsiveness of subsurface sensing systems, and it highlights the potential of regression-based surrogate models for real-time GPR-based detection.
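The specific transform and regressor inside the FRM of [13] are not described here. As a generic illustration only, the sketch below computes a short-time Fourier transform (STFT) magnitude of a synthetic raw A-scan, the kind of time–frequency representation a regression model could consume without background removal or handcrafted features. The Ricker source wavelet, sampling rate, window, and hop sizes are all hypothetical.

```python
import numpy as np

FS = 20e9    # hypothetical sampling rate (20 GS/s)
WIN = 64     # STFT window length in samples (3.2 ns)
HOP = 16     # hop between frames in samples (0.8 ns)

def ricker(t: np.ndarray, f0: float, t0: float) -> np.ndarray:
    """Ricker wavelet with peak frequency f0 (Hz), centred at time t0 (s)."""
    a = (np.pi * f0 * (t - t0)) ** 2
    return (1 - 2 * a) * np.exp(-a)

def stft_magnitude(x: np.ndarray, win: int = WIN, hop: int = HOP) -> np.ndarray:
    """Hann-windowed STFT magnitude; rows are time frames, columns are frequency bins."""
    window = np.hanning(win)
    n_frames = 1 + (len(x) - win) // hop
    frames = np.stack([x[i * hop : i * hop + win] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))

# Synthetic raw A-scan: one reflection at 20 ns plus weak noise (no preprocessing applied)
t = np.arange(1024) / FS
trace = ricker(t, f0=1e9, t0=20e-9) + 0.05 * np.random.default_rng(0).standard_normal(t.size)
tf = stft_magnitude(trace)                                   # shape (61, 33)
frame_times_ns = (np.arange(tf.shape[0]) * HOP + WIN / 2) / FS * 1e9
freqs_ghz = np.fft.rfftfreq(WIN, d=1 / FS) / 1e9
peak_frame, peak_bin = np.unravel_index(np.argmax(tf), tf.shape)
print(f"strongest energy near {frame_times_ns[peak_frame]:.1f} ns, {freqs_ghz[peak_bin]:.2f} GHz")
```

The window length sets the usual trade-off: shorter windows localize the reflection more precisely in time but spread its energy over coarser frequency bins.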

Despite these advantages, the study has limitations that restrict its broader applicability. The model was validated only on a single cylindrical perfect electric conductor (PEC), a simplified test case compared with the variability of real-world conditions. Such a narrow evaluation limits confidence in the method's ability to generalize to diverse subsurface environments, where buried targets can differ substantially in geometry, material heterogeneity, and electromagnetic scattering characteristics. More comprehensive validation on diverse object classes and realistic soil conditions would be a critical next step toward establishing the robustness and scalability of the approach for field applications.

Another recent line of work advanced buried object classification with a second-order deep learning framework that extends the conventional CNN paradigm [14]. GPR images are first processed to extract hyperbola-shaped thumbnails, which serve as inputs to a baseline CNN. Unlike standard models that rely only on first-order feature representations, this method computes covariance matrices from the outputs of intermediate convolutional layers, capturing second-order statistical dependencies that carry richer structural information about the feature maps. These covariance descriptors are then processed by specialized layers designed for symmetric positive definite (SPD) matrices, enabling the network to model complex spatiotemporal patterns and to discriminate subtle characteristics of buried objects more effectively than traditional CNN-based pipelines.
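The second-order step can be sketched as follows: a feature map with \(C\) channels over an \(H \times W\) grid is treated as \(H \cdot W\) samples of a \(C\)-dimensional vector, their covariance gives a \(C \times C\) symmetric matrix, a small ridge term makes it strictly positive definite, and a matrix logarithm maps it to a flat space where ordinary layers apply. The log-Euclidean mapping, the ridge value, and the random stand-in feature map are assumptions for illustration; the specific SPD layers used in [14] may differ.

```python
import numpy as np

def covariance_pool(feature_map: np.ndarray, eps: float = 1e-4) -> np.ndarray:
    """Second-order pooling: (C, H, W) feature map -> (C, C) SPD covariance matrix."""
    c = feature_map.shape[0]
    x = feature_map.reshape(c, -1)              # C x N, one column per spatial position
    x = x - x.mean(axis=1, keepdims=True)       # centre each channel
    cov = x @ x.T / (x.shape[1] - 1)            # unbiased sample covariance
    return cov + eps * np.eye(c)                # ridge term keeps it strictly positive definite

def spd_log(spd: np.ndarray) -> np.ndarray:
    """Matrix logarithm of an SPD matrix via eigendecomposition (log-Euclidean mapping)."""
    eigvals, eigvecs = np.linalg.eigh(spd)
    return (eigvecs * np.log(eigvals)) @ eigvecs.T

def upper_triangle(sym: np.ndarray) -> np.ndarray:
    """Vectorise a symmetric matrix by keeping its upper triangle, diagonal included."""
    return sym[np.triu_indices(sym.shape[0])]

rng = np.random.default_rng(0)
fmap = rng.standard_normal((8, 16, 16))   # stand-in for an intermediate conv-layer output
cov = covariance_pool(fmap)
features = upper_triangle(spd_log(cov))   # 8 * 9 / 2 = 36 values for a downstream classifier
print(cov.shape, features.shape)
```

Each matrix logarithm requires an eigendecomposition of a \(C \times C\) matrix, so the cost grows roughly cubically with the number of channels.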

Empirical evaluation on a large, heterogeneous dataset showed that the second-order model consistently outperformed both shallow GPR-specific neural networks and widely used CNN architectures from computer vision, particularly when training samples were scarce or mislabeled. Its robustness was further validated under variable environmental conditions, including different soil properties and weather conditions, underscoring its potential for real-world deployment. The authors also argue that the architecture could be applied beyond subsurface detection, to radar-based remote sensing and to biomedical signal analysis such as electroencephalography (EEG), where noisy inputs and limited annotated data are common. However, the study acknowledges that the increased architectural complexity and associated computational demands may hinder large-scale or resource-constrained implementation, motivating future work on optimization strategies that balance accuracy and efficiency.

In [15], generative adversarial networks (GANs) are applied to the inverse scattering problem for buried dielectric objects, addressing challenges faced by traditional iterative methods such as nonlinearity, high computational cost, and incomplete data. A GAN consists of a generator that produces candidate images and a discriminator that distinguishes between real and generated images; the two are trained adversarially, which progressively refines the generated results. Compared with U-Net, the GAN-based approach achieved higher reconstruction accuracy and reliability for objects with varying dielectric properties and shapes, representing a novel application of deep learning to buried object imaging. However, GANs are known to be unstable to train, which can affect convergence and reconstruction quality [16].
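The adversarial process can be written with the standard GAN minimax objective, shown first in its original form and then in a conditional form in which both networks also see the measured scattered field \(s\) and the generator maps \(s\) to an image of the buried object. Whether [15] uses exactly this conditional objective, or adds a pixel-wise reconstruction term as many image-to-image GANs do, is an assumption not stated in the text.

\[
\min_{G}\max_{D}\; \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
\]

\[
\min_{G}\max_{D}\; \mathbb{E}_{(s,x)}\big[\log D(s, x)\big] + \mathbb{E}_{s}\big[\log\big(1 - D(s, G(s))\big)\big]
\]

Here \(x\) is a true image, \(z\) a noise vector, and \(D(\cdot) \in (0, 1)\) the discriminator's estimated probability that its input is real. Because \(G\) and \(D\) pursue opposite goals, training seeks a saddle point rather than a minimum, which is one source of the training instability mentioned above.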

Recent advances in GPR and microwave imaging have increasingly used deep learning to improve subsurface object detection, clutter suppression, and data inversion accuracy. Ni et al. (2020) introduced a robust autoencoder (RAE) framework for clutter suppression in GPR B-scan images, showing that decomposing radar data into low-rank and sparse components effectively separates background clutter from target responses [17]. Their method outperformed classical approaches such as mean subtraction, singular value decomposition (SVD), robust principal component analysis (RPCA), and morphological component analysis (MCA) on both simulated and real GPR data, yielding cleaner and more interpretable subsurface images. Building on this, Ni et al. (2022) applied conditional generative adversarial networks (cGANs) to the same problem, learning a mapping from cluttered B-scan images to clutter-free counterparts [18].

By augmenting simulated clutter-free data with real non-target measurements, their model generalized better to field data and achieved superior clutter suppression and computational efficiency compared with state-of-the-art low-rank and sparse decomposition methods. While these studies focused primarily on improving signal clarity, Wang et al. (2025) extended deep learning to the quantitative inversion of subsurface parameters, proposing a semisupervised neural architecture for reconstructing the relative permittivity of buried defects [19]. Their model combined a synthetic aperture radar (SAR) feature fusion branch with a multidimensional attention module to sharpen the representation of defect boundaries, generalized well to new defect types, and outperformed existing inversion methods even with limited annotated data. In parallel, Cheng et al. (2024) improved the interpretability of GPR data with a signal processing approach based on the high-order synchrosqueezing transform (HSST), which provided superior time–frequency resolution and precise reconstruction of geological structures in both simulated and real mining environments [20].

Collectively, these studies show the rapid evolution of deep learning and advanced signal processing in GPR research, from clutter suppression and feature extraction to data inversion and structural interpretation. However, most of these approaches rely heavily on simulated datasets or specific geological assumptions, leaving a gap in experimentally validated, data-driven microwave detection systems for classifying real buried objects. To address this gap, the present study proposes a deep neural network trained exclusively on experimental multi-frequency S-parameter data, with the aim of robust, automated classification of buried threats under realistic measurement conditions.

In this study, a neural network model was developed and trained on real experimental data provided by Mechanical and Chemical Industry, to ensure practical relevance and robust performance. The input features are scattering parameters (S-parameters), which capture the frequency response of the measurement system and carry rich information for identifying buried objects. The model classifies detected objects into three categories: safe (no threat), metal-based threat, and plastic-based threat, enabling more precise risk assessment. By learning from experimentally obtained S-parameters, the network captures complex patterns associated with subsurface anomalies while remaining computationally efficient. This approach demonstrates the potential of data-driven models grounded in real-world measurements for efficient and accurate buried object detection and classification.
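The classification task can be illustrated with a minimal forward pass: complex S-parameters are converted into a real-valued feature vector by stacking real and imaginary parts, passed through one hidden layer, and mapped by a softmax to probabilities over the three classes. The real/imaginary encoding, the number of frequency points `N_FREQ`, the layer sizes, the ReLU activation, and the random weights are all hypothetical; this is not the network architecture of the present study.

```python
import numpy as np

CLASSES = ("safe", "metal-based threat", "plastic-based threat")
N_PORTS = 21   # 21 x 21 S-parameter matrix
N_FREQ = 5     # hypothetical number of frequency points

def s_params_to_features(s: np.ndarray) -> np.ndarray:
    """Flatten complex S-parameters of shape (N_FREQ, N_PORTS, N_PORTS) into a real [Re, Im] vector."""
    flat = s.ravel()
    return np.concatenate([flat.real, flat.imag])

def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())   # shift by the max for numerical stability
    return e / e.sum()

def forward(x, w1, b1, w2, b2) -> np.ndarray:
    """One ReLU hidden layer followed by a three-way softmax (class probabilities)."""
    h = np.maximum(0.0, w1 @ x + b1)
    return softmax(w2 @ h + b2)

rng = np.random.default_rng(0)
shape = (N_FREQ, N_PORTS, N_PORTS)
s = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)   # stand-in measurement
x = s_params_to_features(s)                                         # 2 * 5 * 21 * 21 = 4410 values
w1, b1 = 0.01 * rng.standard_normal((64, x.size)), np.zeros(64)
w2, b2 = 0.01 * rng.standard_normal((3, 64)), np.zeros(3)
probs = forward(x, w1, b1, w2, b2)
print(dict(zip(CLASSES, probs.round(3))), "->", CLASSES[int(np.argmax(probs))])
```

Magnitude and phase, or magnitude in dB alone, are equally plausible encodings; the choice changes the input size and how phase information reaches the network.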

Our earlier study [21] laid the foundation for this line of research by integrating a lightweight neural network model with a microwave-based detection system. In that work, 16 \(\times\) 16 S-parameter measurements were transformed into a compact 256-dimensional feature vector, enabling efficient binary classification of objects into threat and non-threat categories. The framework achieved very high accuracy and demonstrated strong potential for defense and security applications. However, that study was limited in several respects: the dataset comprised only 583 samples, of which 48 were threat cases, and the model operated at a single frequency with a relatively simple network structure.

Building on these findings, the present study introduces several key advances that substantially extend the prior work. First, the input dimensionality is expanded to 21 \(\times\) 21 S-parameters, giving a more detailed representation of the electromagnetic response. Second, the dataset is scaled up to 20,405 samples, including 1,680 threat cases, and the data are entirely distinct from those used in [21]. The larger volume and diversity of measurements improve statistical robustness and support better generalization across varied subsurface conditions. Third, unlike the single-frequency setting of the earlier study, the current work uses multi-frequency data, which adds spectral complexity and calls for a more sophisticated neural network architecture. Finally, the classification task is extended from a binary to a three-class setting that distinguishes safe (no threat), metal-based threat, and plastic-based threat. This finer categorization differentiates the nature of a threat, allowing precautionary measures to be tailored to whether the detected object is metallic or plastic. Together, these advances move our earlier work toward a more robust, data-rich solution for buried object detection and risk assessment.
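The scale-up can be quantified with simple arithmetic on the figures above. The new dataset is exactly 35 times larger than the earlier one (583 × 35 = 20,405) and contains exactly 35 times as many threat cases (48 × 35 = 1,680), so the threat share is the same in both, about 8.2%, and roughly 92% of samples are non-threat. The per-frequency S-parameter count grows from 256 to 441 entries; the total input size additionally depends on the number of frequency points, which is not given here.

```python
earlier = {"samples": 583, "threats": 48, "s_entries": 16 * 16}         # study [21], single frequency
current = {"samples": 20_405, "threats": 1_680, "s_entries": 21 * 21}   # present study, per frequency

for name, d in (("earlier", earlier), ("current", current)):
    frac = d["threats"] / d["samples"]
    print(f"{name}: {d['samples']} samples, {d['threats']} threats ({100 * frac:.2f}%), "
          f"{d['s_entries']} S-parameter entries per frequency")

print("dataset scale-up:", current["samples"] / earlier["samples"])
```

Both lines report a threat share of 8.23%, and the scale-up factor prints as 35.0.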