
After a lavish holiday meal, you're relaxing in the living room when your uncle starts scrolling through vertical videos. “Did you see the one with the cat snatching a snake from the man's bed?” he asks.

Is it real? Is it fake? You feel your head starting to hurt.

“We're overwhelmed by slop,” says Mike Caulfield, co-author of the book Verified: How to Think Straight, Get Duped Less, and Make Better Decisions about What to Believe Online. “It just floods the zone and at some point your mental capacity becomes exhausted.”

But Caulfield and other experts say there's no need to give in to despair, at least for now. There are some simple do's and don'ts you can use to assess the reliability of the information you see online.

Don't think everything is fake

Because feeds are so full of slop, it's easy to think that everything you see online is fake. But that bias is just as dangerous as believing that everything you see is real, warns Corinna Koltai, a senior researcher at Bellingcat, an organization specializing in open source research.

Bystander video remains a highly important source of evidence for crimes committed by individuals and law enforcement. When people stop believing these videos, it becomes easier for bad actors to claim real events are fake and avoid responsibility. Researchers call this the “liar's dividend.”

“I think that's one of the big risks when it comes to this kind of content,” Koltai said. “It's not about someone believing a fake video, it's about people disbelieving a real video.”

Koltai and her colleagues say it's especially important to carefully consider videos that provoke a strong emotional response or that contradict one's personal beliefs. Real videos often capture complex situations that can challenge our understanding of the world. That said, many fake videos are designed to provoke exactly that kind of reaction in order to drive engagement.

Look for some quick tells in the video

Hany Farid, a professor at the University of California, Berkeley who studies manipulated media, said AI-generated videos are already good and are improving rapidly. Even experts can be fooled, he says. “I’ve been doing this every day for a long time and it’s really hard. Really difficult.”

But a few simple features can help you tell whether the content you're watching is AI-generated. The biggest tip-off is the length of the video.

“Most companies limit the length of AI videos because creating these videos is very computationally expensive,” Farid said. Many videos end up being only 8 to 10 seconds long. You can put together a long video by combining lots of short cuts, but “when you see these little bite-sized videos, it's a good sign that you need to take a breather.”

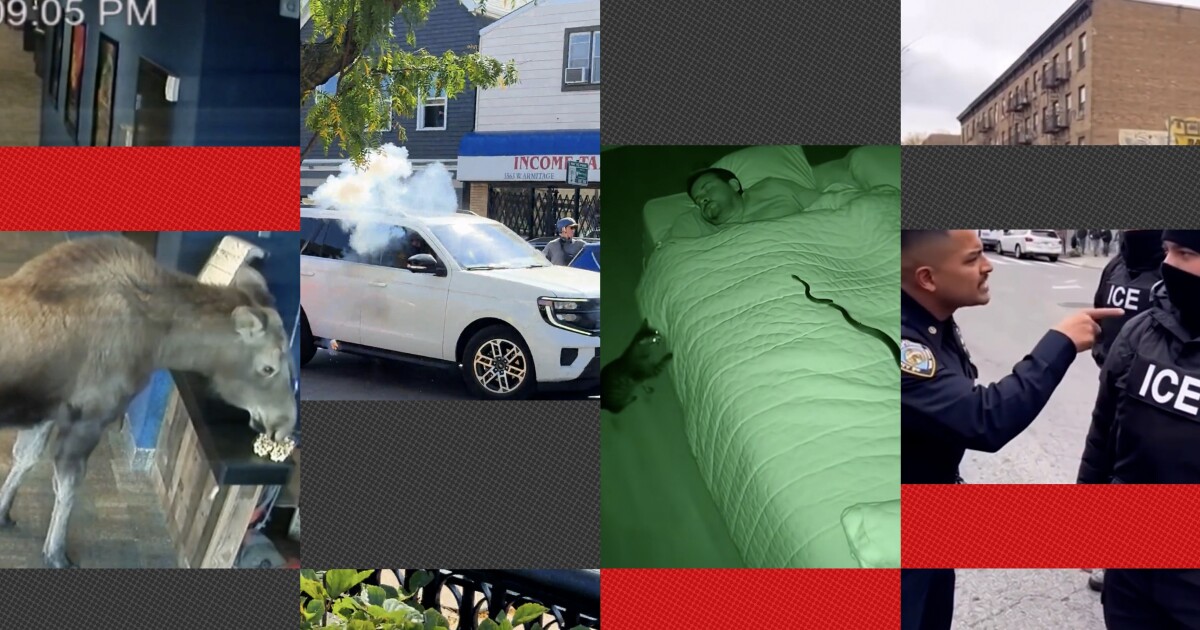

Length isn't the only thing that matters. Farid said AI-generated videos tend to frame the subject perfectly. The main characters are featured prominently in the video, and the action starts and stops cleanly, even if the video is short. That's one reason why the quiz video of a New York City police officer yelling at an ICE officer is clearly fake.

“It has a very professional look to it,” he said. Camera positions can also be odd. For example, is the camera too close to the subject of an ICE raid? Or is its movement too smooth while following a running animal, as if it were mounted on a gimbal? Those could be clues that the video was generated by AI.

Check the context

Caulfield said that while the nature of the video is important, where it is shared can sometimes be even more important.

Just looking at where the video was posted and reading the comments can give you powerful clues. For example, the second video of an ICE raid in the quiz comes from a Reddit community in Chicago's Logan Square neighborhood.

Similarly, pay attention to the person who posted the video. If their feed has a long history of content unrelated to immigration raids, it lends credence to the idea that they are a real person who witnessed a raid. “It may be easy to fake a video, but it's difficult to step into a time machine and create a 10-year history talking about Chicago hot dogs,” Caulfield said.

If you're not sure whether the video you're viewing was originally posted by the account you're viewing, try a simple reverse image search on Google or another platform. Such searches often return the original post, other videos of the same event, or news reports that confirm or debunk the video. Both the Logan Square ICE raid and the moose eating popcorn were covered by the media when they happened.

Conversely, identifying an AI video is often as simple as looking closely at the account that posted it. Koltai said it's common for an account's profile description to identify its content as AI-generated. Even if it doesn't, the comments may show that many people believe the video was generated by AI.

Don't feel you need to share, especially if you have doubts

Finally, all three researchers agree that it's important to share less in an age when algorithms value speed over accuracy.

According to Koltai, much of the AI content shared online is bait for engagement. Its creators “often have a financial incentive to get users to like, comment, and share because it often results in more revenue,” she said.

When in doubt, Caulfield says it may be best to wait. “You don’t necessarily have to be the first to share this, you can be the one who waits,” he said. Video of the event is often confirmed by supporting video and news reports within hours.

While many people may think that sharing an AI video of a rabbit jumping on a trampoline or a cat snatching a snake from its owner's bed doesn't matter, experts agree it does. When people are fooled by AI videos, their trust in important videos is undermined.

“People are like, oh, is that really a big deal?” Koltai said. But she said everyone should be concerned. “What if you can't tell what's real and what's not online? That's really incredibly dangerous to me.”

Hany Farid agreed, saying, “Every like, every click, every share, every engagement, you're part of the problem at this point.”

Copyright 2025 NPR