Everyone talks about LLMs, but today's AI ecosystem is much more than language models. Behind the scenes, a family of specialized architectures is quietly changing how machines see, plan, act, route computation across experts, and run efficiently on small devices. Each of these models solves a different part of the intelligence puzzle, and together they form the next generation of AI systems.

This article describes five major players: Large Language Models (LLMs), Vision Language Models (VLMs), Mixture of Experts (MoE) models, Large Action Models (LAMs), and Small Language Models (SLMs).

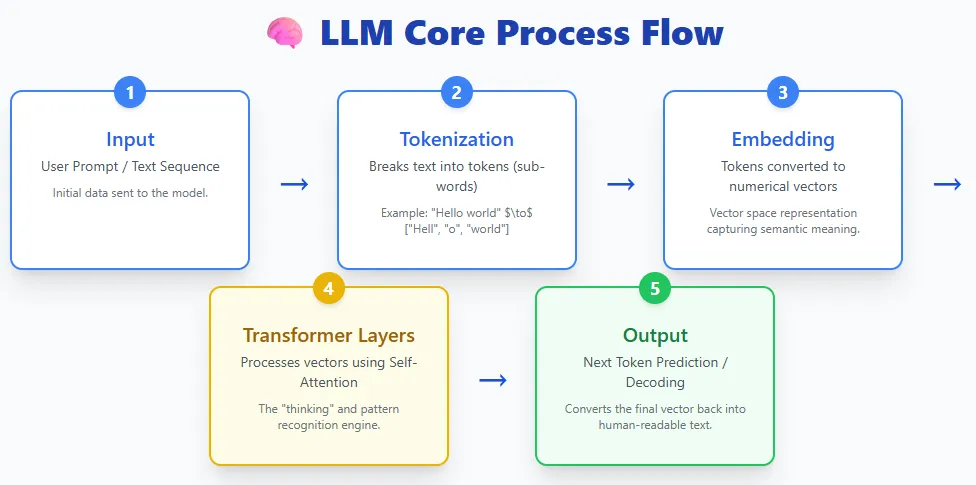

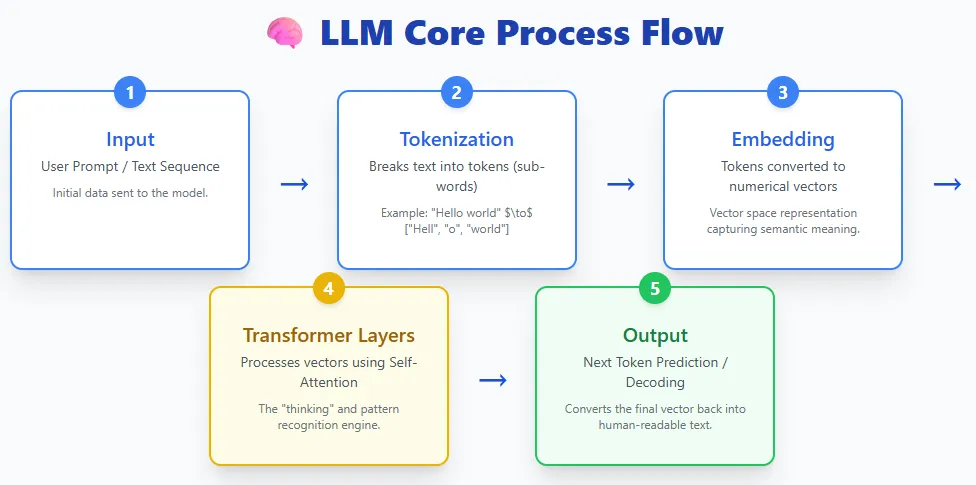

An LLM takes in text, splits it into tokens, converts those tokens into embeddings, passes them through a stack of transformer layers, and generates text back one token at a time. The models behind ChatGPT, Claude, Gemini, and Llama all follow this basic process.
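To make the pipeline concrete, here is a minimal sketch in plain Python. Everything in it is a toy assumption: the six-word vocabulary, the whitespace tokenizer, the random embeddings, and a single attention step with no learned weights. A real LLM uses a subword tokenizer, learned parameters, and many stacked layers, but the order of operations is the same.

```python
import math
import random

# Toy vocabulary (assumption); real LLMs use subword vocabularies with tens of thousands of entries.
VOCAB = ["<unk>", "the", "cat", "sat", "on", "mat"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}
DIM = 4

rng = random.Random(0)
# One embedding vector per vocabulary entry (random here, learned in practice).
EMBEDDINGS = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]


def tokenize(text):
    """Whitespace tokenizer standing in for a real subword tokenizer."""
    return [TOKEN_TO_ID.get(word, 0) for word in text.lower().split()]


def embed(token_ids):
    """Look up the embedding vector for each token id."""
    return [EMBEDDINGS[i] for i in token_ids]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Causal attention using the raw embeddings as queries, keys, and values."""
    out = []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # each position only sees itself and earlier tokens
        weights = softmax([dot(query, key) / math.sqrt(DIM) for key in visible])
        out.append([sum(w * v[d] for w, v in zip(weights, visible)) for d in range(DIM)])
    return out


def next_token(text):
    """Return the most likely next token and the full probability distribution."""
    hidden = self_attention(embed(tokenize(text)))
    # Score every vocabulary entry against the last position's hidden state.
    logits = [dot(hidden[-1], emb) for emb in EMBEDDINGS]
    probs = softmax(logits)
    best = max(range(len(VOCAB)), key=probs.__getitem__)
    return VOCAB[best], probs
```

Calling `next_token("the cat")` returns one word from the toy vocabulary plus a probability for every entry. Since the weights are random, the prediction itself is meaningless; only the flow from text to tokens to embeddings to a next-token distribution is the point.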

At the heart of an LLM is a deep learning model trained on large amounts of text data. This training enables it to understand language, generate responses, summarize information, write code, answer questions, and perform a wide range of other tasks. LLMs use a transformer architecture, which is very good at handling long sequences and capturing complex patterns in language.

LLMs are now widely accessible through consumer tools and assistants, from OpenAI's ChatGPT and Anthropic's Claude to Meta's Llama models, Microsoft Copilot, and Google's Gemini and BERT/PaLM families. Their versatility and ease of use make them the foundation of modern AI applications.

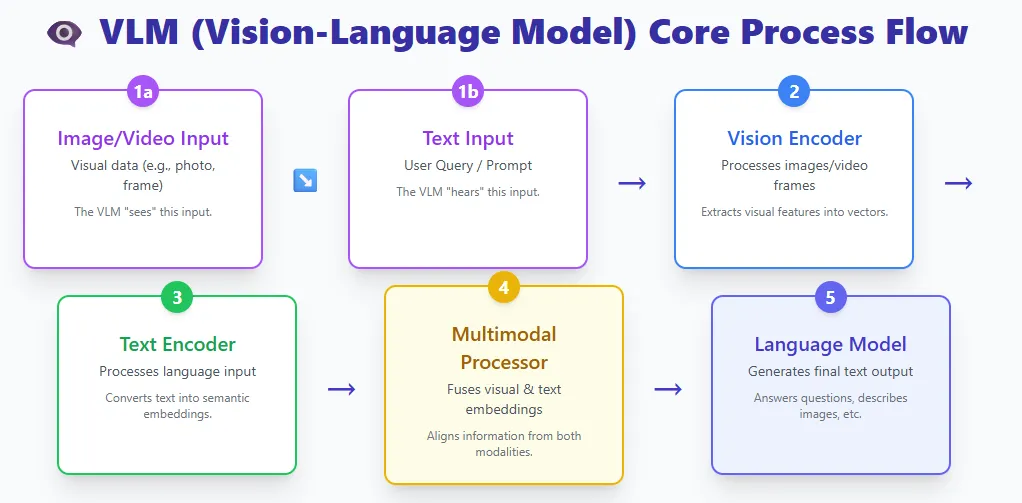

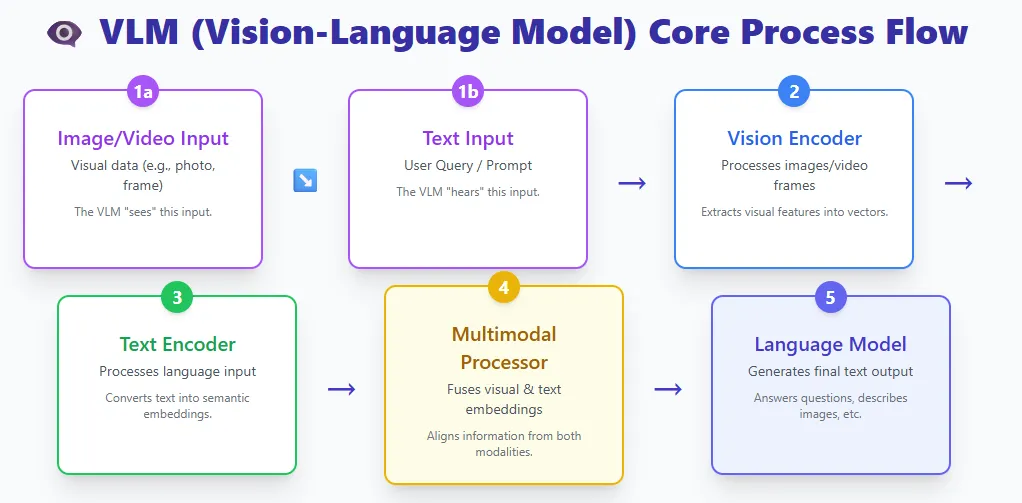

A VLM combines two worlds:

- A vision encoder that processes images or videos

- A text encoder that processes language

Both streams meet in a multimodal processor, and the language model produces the final output.
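One common way to merge the two streams, used by LLaVA-style models, is to project the vision features into the language model's embedding space and concatenate them with the text embeddings. The sketch below illustrates that idea only. The encoders are stand-ins, and every name and dimension (`encode_image`, `PROJECTION`, `TEXT_DIM`, and so on) is a hypothetical chosen for this example.

```python
import random

TEXT_DIM = 4    # width of the language model's token embeddings (toy value)
VISION_DIM = 3  # width of the vision encoder's patch features (toy value)

rng = random.Random(0)
# Hypothetical projection matrix mapping vision features into the text embedding space.
PROJECTION = [[rng.uniform(-1, 1) for _ in range(TEXT_DIM)] for _ in range(VISION_DIM)]


def encode_image(patches):
    """Stand-in vision encoder: summarizes each patch as (mean, min, max) of its pixels."""
    return [[sum(p) / len(p), min(p), max(p)] for p in patches]


def project(features):
    """Multiply each vision feature vector by the projection matrix."""
    return [
        [sum(f[i] * PROJECTION[i][j] for i in range(VISION_DIM)) for j in range(TEXT_DIM)]
        for f in features
    ]


def encode_text(words):
    """Stand-in text embedding: a deterministic pseudo-random vector per word."""
    vectors = []
    for word in words:
        word_rng = random.Random(word)
        vectors.append([word_rng.uniform(-1, 1) for _ in range(TEXT_DIM)])
    return vectors


def build_multimodal_sequence(patches, prompt):
    """Image tokens first, then text tokens, all in the same embedding space."""
    image_tokens = project(encode_image(patches))
    text_tokens = encode_text(prompt.split())
    return image_tokens + text_tokens
```

The language model then treats the combined sequence like any other input. Two image patches plus the prompt "what is this" give a sequence of five vectors, each of width `TEXT_DIM`.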

Examples include GPT-4V, Gemini Pro Vision, and LLaVA.

A VLM is essentially a large language model given the ability to see. By blending visual and textual representations, these models can understand images, interpret documents, answer questions about photos, explain videos, and more.

Traditional computer vision models are trained for one narrow task, such as classifying cats and dogs or extracting text from images, and cannot generalize beyond the classes they were trained on. If you need new classes or tasks, you have to retrain them.

VLMs remove this limitation. Trained on huge datasets of images, videos, and text, they can perform many visual tasks zero-shot, simply by following natural language instructions. Everything from image captioning and OCR to visual reasoning and multi-step document understanding can be done without task-specific retraining.

This flexibility makes VLMs one of the most powerful advances in modern AI.

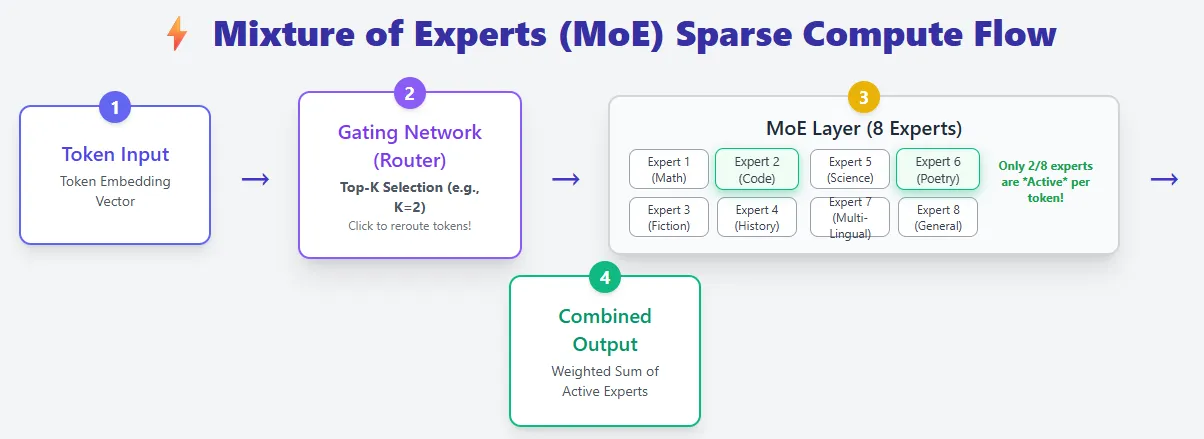

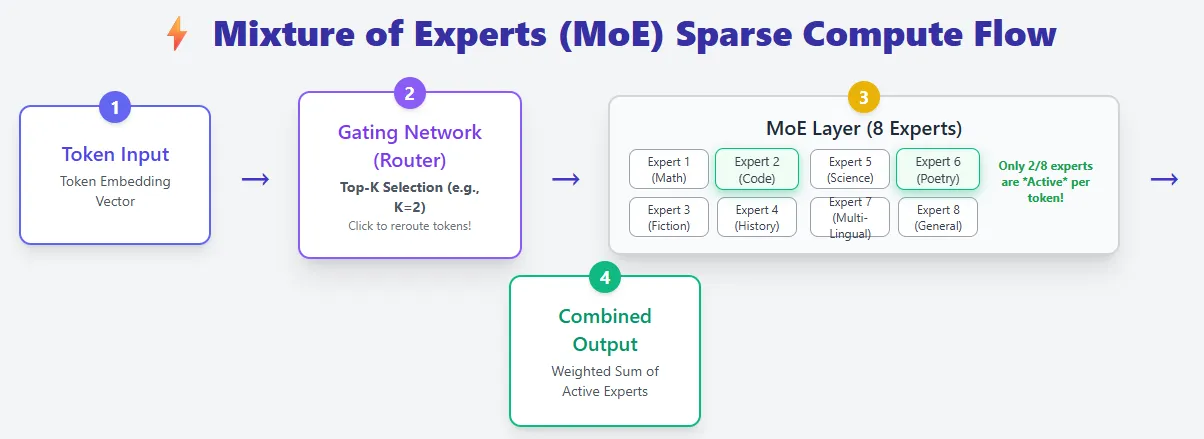

A Mixture of Experts model is built on the standard transformer architecture but introduces one important change. Instead of one feedforward network per layer, it uses many smaller expert networks and activates only a few of them per token. This makes MoE models efficient while still offering high capacity.

In a regular transformer, all tokens go through the same feedforward network. That is, all parameters are used for all tokens. An MoE layer replaces this with a pool of experts, and a router decides which experts handle each token (top-K selection). As a result, an MoE model may contain a much larger number of total parameters, but only a small fraction of them is used for any given token, which makes the computation sparse.
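The routing step can be sketched in a few lines of plain Python. This is an illustration under assumptions, not any particular model's implementation: the experts are toy functions, the router scores are passed in directly rather than computed by a learned layer, and the names `top_k_route` and `moe_layer` are hypothetical. Normalizing the weights with a softmax over only the selected experts is one common choice.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and give them weights that sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(token, experts, router_logits, k=2):
    """Run only the selected experts and return their weighted sum."""
    routed = top_k_route(router_logits, k)
    output = [0.0] * len(token)
    for index, weight in routed:
        expert_output = experts[index](token)  # the other experts are never called
        output = [o + weight * e for o, e in zip(output, expert_output)]
    return output, [index for index, _ in routed]


# Four toy experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
```

With router scores `[0.1, 2.0, -1.0, 2.0]` and `k=2`, only experts 1 and 3 run. Their scores tie, so each gets weight 0.5, and the token `[1.0, 2.0]` comes out as `[3.0, 6.0]`. Experts 0 and 2 cost nothing for this token, which is where the sparsity comes from.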

For example, Mixtral 8×7B has over 46B parameters, but each token uses only about 13B.
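These two numbers also explain why "8×7B" does not add up to 56B. A rough back-of-envelope model, assuming Mixtral's top-2 routing over 8 experts and treating everything outside the experts (attention, embeddings) as shared, is: total = shared + 8 × expert and active = shared + 2 × expert. The helper below solves those two equations. The function name is hypothetical and the inputs are the rounded figures from the text, so the result is an estimate only.

```python
def split_moe_params(total_b, active_b, n_experts, top_k):
    """Estimate shared and per-expert parameters (in billions) from total and active counts.

    Assumes: total  = shared + n_experts * per_expert
             active = shared + top_k     * per_expert
    """
    per_expert = (total_b - active_b) / (n_experts - top_k)
    shared = total_b - n_experts * per_expert
    return shared, per_expert


shared, per_expert = split_moe_params(total_b=46, active_b=13, n_experts=8, top_k=2)
active_fraction = 13 / 46
```

With these rounded inputs, each expert comes out at about 5.5B parameters (summed over all layers) and the shared part at about 2B, so each token touches a little under 30% of the model.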

This design significantly reduces the compute needed for inference. Rather than scaling by making the model deeper or wider (which increases FLOPs for every token), MoE models scale by adding more experts, increasing capacity without a matching increase in compute per token. This is why MoE is said to offer “bigger brains at lower runtime costs.”

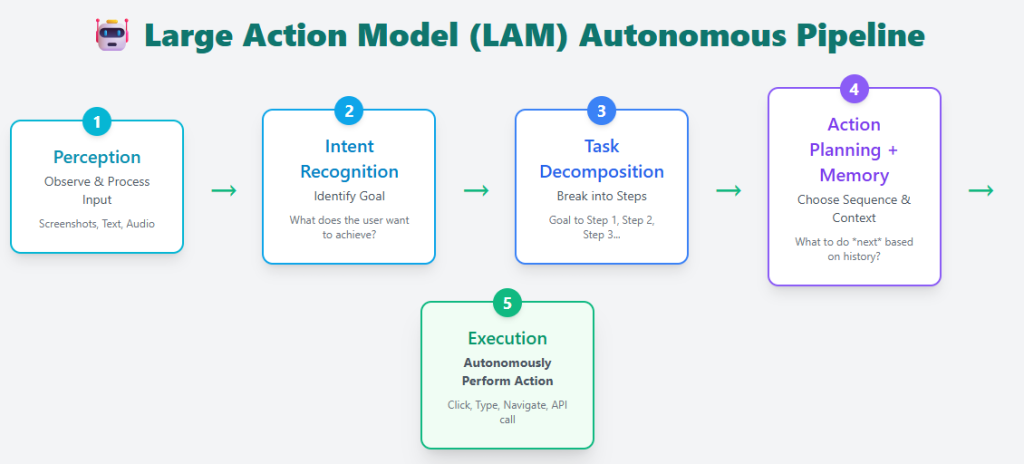

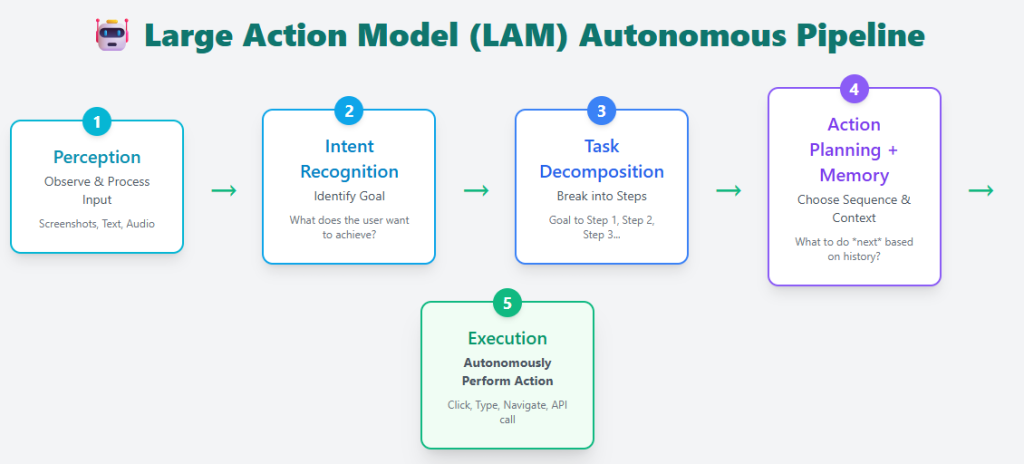

Large Action Models go beyond text generation to turn intent into action. Rather than just answering questions, a LAM can understand what the user wants, break the task down into steps, plan the necessary actions, and execute them in the real world or on a computer.

A typical LAM pipeline includes:

- Perception – understand the user's input

- Intent recognition – identify what the user is trying to accomplish

- Task decomposition – break the goal down into actionable steps

- Action planning + memory – use past and current context to choose the correct sequence of actions

- Execution – carry out the tasks autonomously
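The stages above can be sketched as a small Python class. This is a toy under stated assumptions: the `Agent` class, its keyword-based intent matching, and the hard-coded step names are all hypothetical, and `execute` only records steps instead of clicking buttons or calling APIs as a real LAM would.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    # Steps that have already been completed (the "memory" of the pipeline).
    memory: list = field(default_factory=list)

    def perceive(self, raw_input):
        """Perception: normalize the raw user input."""
        return raw_input.strip().lower()

    def recognize_intent(self, text):
        """Intent recognition: a keyword match standing in for a learned model."""
        if "book" in text and "room" in text:
            return "book_room"
        return "unknown"

    def decompose(self, intent):
        """Task decomposition: map an intent to an ordered list of steps."""
        plans = {
            "book_room": ["open_booking_site", "search_rooms", "select_room", "confirm_booking"],
        }
        return plans.get(intent, [])

    def plan(self, steps):
        """Action planning + memory: skip steps that memory says are already done."""
        return [step for step in steps if step not in self.memory]

    def execute(self, steps):
        """Execution: record each step as done and return what was executed."""
        for step in steps:
            self.memory.append(step)
        return list(steps)

    def run(self, raw_input):
        text = self.perceive(raw_input)
        intent = self.recognize_intent(text)
        return self.execute(self.plan(self.decompose(intent)))
```

For example, `Agent(memory=["open_booking_site"]).run("Book a room for Friday")` skips the step that is already done and executes the remaining three in order, while an unrecognized request produces no actions at all.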

Examples include Rabbit R1, Microsoft's UFO framework, and Claude Computer Use. All of these can interact with apps, navigate interfaces, and complete tasks on your behalf.

LAMs are trained on huge datasets of real user actions, which gives them the ability to act rather than just respond: booking a room, filling out a form, organizing files, or executing multi-step workflows. This moves AI from a passive assistant to an active agent capable of making complex real-time decisions.

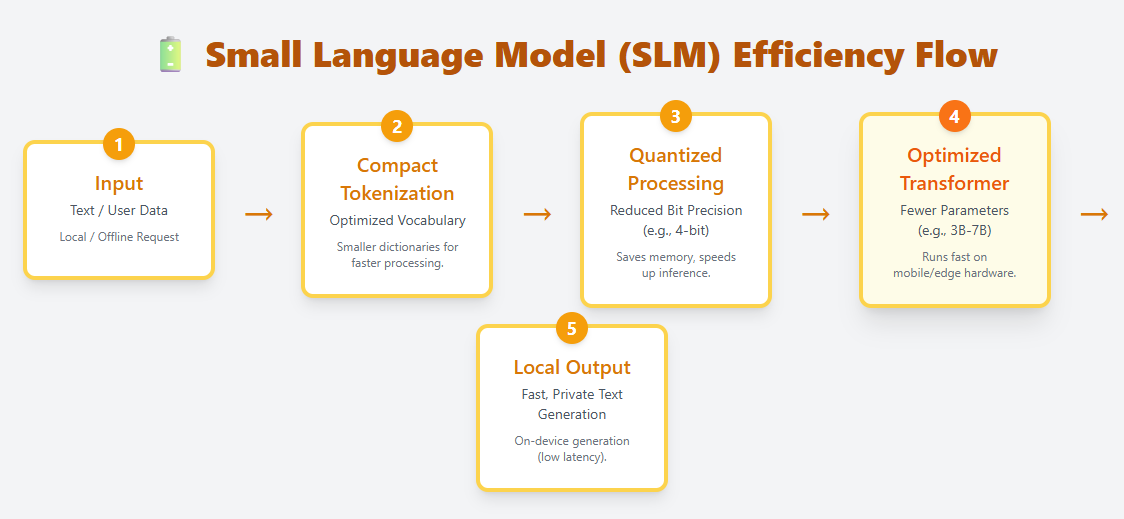

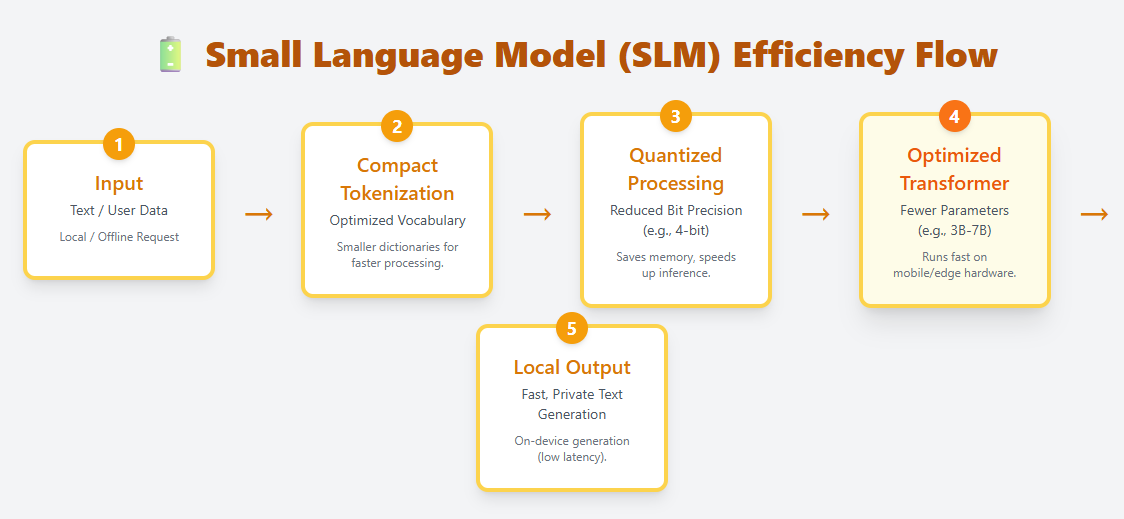

An SLM is a lightweight language model designed to run efficiently on edge devices, mobile hardware, and other resource-constrained environments. It enables local, on-device deployment through compact tokenization, optimized transformer layers, and aggressive quantization. Examples include Phi-3, Gemma, Mistral 7B, and Llama 3.2 1B.
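Quantization is the easiest of these techniques to show in code. The sketch below uses symmetric per-tensor int8 quantization, which is only one of several schemes and an assumption made for this example (production toolchains often go down to 4 bits and quantize per channel or per group). The function names are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each weight is now stored as one small integer plus a single shared scale, instead of a 16-bit or 32-bit float. The price is a rounding error of at most half the scale per weight.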

Unlike LLMs, which can have hundreds of billions of parameters, SLMs typically range from a few million to a few billion. Despite their small size, they can understand and generate natural language, making them useful for chatting, summarizing, translating, and automating tasks without the need for cloud computing.
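Parameter count translates directly into memory. As a rough estimate, the weights alone take (number of parameters × bits per weight ÷ 8) bytes. The helper below is a hypothetical one-liner for that arithmetic, and it ignores activations, the attention cache, and runtime overhead.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


one_b_fp16 = weight_memory_gb(1e9, 16)   # 1B parameters at 16 bits each
one_b_int4 = weight_memory_gb(1e9, 4)    # the same model quantized to 4 bits
seven_b_int4 = weight_memory_gb(7e9, 4)  # a 7B model quantized to 4 bits
```

A 1B-parameter model needs about 2 GB at 16 bits and about 0.5 GB at 4 bits, and a 7B model at 4 bits needs about 3.5 GB, which is why models of this size can fit on phones and laptops.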

SLMs require much less memory and compute, making them ideal for:

- Mobile apps

- IoT and edge devices

- Offline or privacy-sensitive scenarios

- Low-latency applications where cloud calls are too slow

SLMs represent a growing shift toward fast, private, and cost-effective AI, bringing language intelligence directly to personal devices.

I am a Civil Engineering graduate from Jamia Millia Islamia, New Delhi (2022) and have a strong interest in data science, especially neural networks and their applications in various fields.